2023 AI Research from Lithuanian researchers 🚀🦾🧠

(Part 1)

As 2023 nears its end, I want to take a moment to acknowledge and thank the extraordinary AI researchers from Lithuania for their significant achievements this year. Their work is a testament to the exceptional AI talent the country has produced.

Disclaimer: This list may not cover everyone, so feel free to mention other noteworthy researchers in the comments who deserve recognition too.

RESEARCH 🚀🤩

Rūta Binkytė (Inria/École Polytechnique de Paris) - Causal Discovery for Fairness: READ

This paper is about addressing fairness in machine learning decisions through causality. It highlights the challenge that a causal model is often unavailable and reviews major algorithms for causal discovery from observational data. It emphasizes that different causal discovery approaches can lead to different causal models, and that even minor differences between these models can significantly affect conclusions about fairness and discrimination.

Rūta Binkytė (Inria/École Polytechnique de Paris) - Shedding light on underrepresentation and Sampling Bias in machine learning: READ

This paper is about the importance of accurately measuring discrimination in machine learning models and the impact of various biases on this process. It introduces clear definitions for types of sampling bias, such as sample size bias (SSB) and underrepresentation bias (URB), and explores how discrimination can be broken down into variance, bias, and noise, while challenging the common mitigation approach of simply collecting more samples from underrepresented groups.

Rūta Binkytė (Inria/École Polytechnique de Paris) - BaBE: Enhancing Fairness via Estimation of Latent Explaining Variables: READ

This paper is about addressing unfair discrimination between groups by proposing BaBE (Bayesian Bias Elimination), a pre-processing method for achieving fairness. It tackles the problem that a legitimate explanatory attribute (E) is often not directly observable in the data, using Bayesian inference and the Expectation-Maximization method to estimate the value of E. Experiments demonstrate that the approach achieves both fairness and high accuracy.

Rūta Binkytė (Inria/École Polytechnique de Paris) - Causal Discovery Under Local Privacy: READ

This paper is about analyzing the trade-off between privacy and accuracy in causal discovery tasks when using various locally differentially private mechanisms, which allow data providers to individually privatize their data. The study compares how these privacy-preserving methods affect the performance of causal learning algorithms, providing insights for selecting suitable protocols for locally private causal discovery, aiding researchers and practitioners in this field.
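
To make "locally differentially private" concrete, here is a minimal sketch of randomized response, the textbook local DP mechanism for binary data. It is an illustration under assumptions, not necessarily one of the mechanisms the paper compares; the data, the `epsilon` value, and all function names are hypothetical. Each provider flips its own bit at random before sharing it, and an analyst can still recover population statistics by correcting for the known flip rate.

```python
import numpy as np

def keep_probability(epsilon):
    # Randomized response satisfies epsilon-local DP when the true bit is kept
    # with probability e^eps / (1 + e^eps) and flipped otherwise.
    return np.exp(epsilon) / (1.0 + np.exp(epsilon))

def randomized_response(bits, epsilon, rng):
    """Each data provider privatizes its own 0/1 value before sharing it."""
    keep = rng.random(bits.shape) < keep_probability(epsilon)
    return np.where(keep, bits, 1 - bits)

def debiased_mean(reports, epsilon):
    """Unbiased estimate of the true fraction of ones from the noisy reports."""
    p = keep_probability(epsilon)
    # E[report] = (1 - p) + pi * (2p - 1), solved for pi.
    return (reports.mean() - (1.0 - p)) / (2.0 * p - 1.0)

rng = np.random.default_rng(0)
true_bits = (rng.random(200_000) < 0.3).astype(int)  # hypothetical sensitive attribute
reports = randomized_response(true_bits, epsilon=1.0, rng=rng)
naive = reports.mean()                          # biased toward 0.5 by the flips
estimate = debiased_mean(reports, epsilon=1.0)  # close to the true fraction
```

A smaller `epsilon` means more flips, stronger privacy, and noisier statistics. Any causal discovery algorithm run on such reports inherits that noise, which is the privacy/accuracy trade-off the paper studies.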

Kamilė Lukošiūtė (Anthropic) - Constitutional AI: Harmlessness from AI Feedback: READ

This paper is about Constitutional AI, a method for training a harmless AI assistant through self-improvement, without any human labels identifying harmful outputs. It combines supervised learning with reinforcement learning from AI feedback (RLAIF), enabling precise control over AI behavior with minimal human intervention.

Kamilė Lukošiūtė (Anthropic) - Discovering Language Model Behaviors with Model-Written Evaluations: READ

This paper is about generating automatic evaluations for language models (LMs) using the LMs themselves, revealing novel behaviors like inverse scaling and sycophancy in larger models, and identifying issues in models trained with RL from Human Feedback (RLHF), all while producing high-quality, relevant datasets quickly and efficiently.

Kamilė Lukošiūtė (Anthropic) - The Capacity for Moral Self-Correction in Large Language Models: READ

This paper is about testing the hypothesis that language models trained with reinforcement learning from human feedback (RLHF) can "morally self-correct" to avoid harmful outputs, finding evidence that this ability emerges at 22B model parameters and improves with model size and RLHF training, indicating a potential for these models to abide by ethical principles.

Kamilė Lukošiūtė (Anthropic) - Measuring Faithfulness in Chain-of-Thought Reasoning: READ

This paper is about investigating the faithfulness of chain-of-thought (CoT) reasoning in large language models (LLMs). It reveals that models vary in how much they rely on CoT across tasks and that CoT reasoning tends to become less faithful as models grow larger, suggesting that faithful CoT depends on carefully choosing factors such as model size and task.

Kamilė Lukošiūtė (Anthropic) - Studying Large Language Model Generalization with Influence Functions: READ

This paper is about using Eigenvalue-corrected Kronecker-Factored Approximate Curvature (EK-FAC) to scale influence functions to large language models (LLMs) with up to 52 billion parameters. This makes it possible to study how LLMs generalize, and reveals a limitation: influence decays significantly when the order of key phrases is flipped.

Tadas Baltrušaitis (Microsoft) - Rodin: A Generative Model for Sculpting 3D Digital Avatars Using Diffusion: READ

This paper is about Rodin, a 3D generative model that efficiently generates high-quality digital avatars represented as neural radiance fields, using diffusion models. It employs 3D-aware convolution and latent conditioning to achieve global coherence and fine detail, enabling text-guided avatar creation and semantic editing.

Tadas Baltrušaitis (Microsoft) - Procedural Humans for Computer Vision: READ

This paper is about extending the use of synthetic data in computer vision to full-body human models, detailing the construction of a parametric face and body model, a rendering pipeline for realistic human images, and methods for training deep neural networks to identify dense body landmarks, aiming to improve privacy, reduce bias, and enhance annotation quality in applications like autonomous driving and face reconstruction.

Tadas Baltrušaitis (Microsoft) - DigiFace-1M: 1 Million Digital Face Images for Face Recognition: READ

This paper is about introducing a large-scale synthetic dataset for face recognition that avoids the biases and ethical concerns of web-crawled face images. It demonstrates that aggressive data augmentation reduces the synthetic-to-real domain gap and shows how controlling various attributes affects accuracy. The method outperforms existing approaches trained on GAN-generated synthetic faces, and with the help of a small number of real face images it reaches accuracy on the LFW dataset comparable to models trained on millions of real images.

Tadas Baltrušaitis (Microsoft) - HiFace: High-Fidelity 3D Face Reconstruction by Learning Static and Dynamic Details: READ

This paper is about HiFace, a high-fidelity 3D face reconstruction model that explicitly separates static and dynamic facial details, modeling the former with a displacement basis and the latter by interpolating between displacement maps. Extensive experiments show that it achieves state-of-the-art reconstruction quality and produces animatable details.

Gintarė Karolina Džiugaitė (Google) - The Effect of Data Dimensionality on Neural Network Prunability: READ

This paper is about investigating the impact of the low-dimensional structure of high-dimensional input data, such as images, text, and audio, on the prunability of a neural network, exploring how this underlying structure might affect the ability to prune weights without compromising the model's accuracy.

Gintarė Karolina Džiugaitė (Google) - Limitations of Information-Theoretic Generalization Bounds for Gradient Descent Methods in Stochastic Convex Optimization: READ

This paper is about the limitations of current information-theoretic frameworks in establishing minimax rates for gradient descent in stochastic convex optimization, demonstrating that existing methods, including various mutual information bounds and PAC-Bayes bounds, fail to achieve this, and suggesting the need for new approaches to analyze gradient descent effectively using information-theoretic techniques.
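
For orientation, the prototypical bound of this family is the mutual-information bound of Xu and Raginsky (2017), quoted here from the general literature rather than from this paper. If an algorithm outputs weights $W$ from $n$ i.i.d. samples $S$ and the loss is $\sigma$-sub-Gaussian for every $W$, then the expected gap between population risk $L_\mu$ and empirical risk $L_S$ satisfies:

$$
\left|\,\mathbb{E}\left[L_\mu(W) - L_S(W)\right]\right| \le \sqrt{\frac{2\sigma^2\, I(S;W)}{n}}
$$

The paper's negative result concerns whether bounds of this kind, and their PAC-Bayes relatives, can recover the minimax rate for gradient descent.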

Gintarė Karolina Džiugaitė (Google) - JaxPruner: A concise library for sparsity research: READ

This paper is about introducing JaxPruner, an open-source pruning and sparse training library for JAX, designed to facilitate research on sparse neural networks with a common API, seamless integration with Optax, and compatibility with existing JAX libraries, demonstrated through examples and experiments on various codebases and benchmarks.
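
JaxPruner's own API is not reproduced here. As a hedged illustration of the simplest technique that pruning libraries implement, this sketch does global magnitude pruning in NumPy: it zeroes the smallest-magnitude fraction of weights across all layers and returns the binary masks. The parameter names, shapes, and sparsity level are hypothetical.

```python
import numpy as np

def global_magnitude_prune(params, sparsity):
    """Zero out the smallest-magnitude `sparsity` fraction of weights across all arrays."""
    all_mags = np.concatenate([np.abs(p).ravel() for p in params.values()])
    k = int(round(sparsity * all_mags.size))  # number of weights to remove
    # Threshold = k-th smallest magnitude; with k == 0 nothing is pruned.
    threshold = np.partition(all_mags, k - 1)[k - 1] if k > 0 else -np.inf
    masks = {name: np.abs(p) > threshold for name, p in params.items()}
    pruned = {name: p * masks[name] for name, p in params.items()}
    return pruned, masks

rng = np.random.default_rng(0)
params = {"dense1": rng.normal(size=(64, 32)), "dense2": rng.normal(size=(32, 10))}
pruned, masks = global_magnitude_prune(params, sparsity=0.8)
kept = sum(int(m.sum()) for m in masks.values())
total = sum(p.size for p in params.values())
```

In sparse-training research, updates like this are usually applied on a schedule during training rather than once; a library's job is to wrap that bookkeeping around the optimizer.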

Gintarė Karolina Džiugaitė (Google) - Identifying Spurious Biases Early in Training through the Lens of Simplicity Bias: READ

This paper is about leveraging the simplicity bias of gradient descent in neural networks to identify spurious correlations early in training. It presents SPARE, a method that separates groups with strong spurious correlations and uses importance sampling to balance these groups, improving worst-group accuracy while running faster than existing methods for mitigating spurious correlations on datasets such as Restricted ImageNet.
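
The balancing step can be illustrated in isolation. This is a minimal sketch, assuming group labels are already available (in SPARE they come from separating examples early in training; here they are hypothetical): each example gets a sampling probability inversely proportional to its group's size, so every group receives equal total mass. It is not the paper's code.

```python
import numpy as np

def balanced_sampling_probs(group_ids):
    """Per-example sampling probabilities that give every group equal total mass."""
    groups, inverse, counts = np.unique(group_ids, return_inverse=True, return_counts=True)
    # Each group's mass 1/G is split evenly among its members.
    return 1.0 / (len(groups) * counts[inverse])

group_ids = np.array([0] * 90 + [1] * 10)  # hypothetical: 90 majority, 10 minority examples
probs = balanced_sampling_probs(group_ids)
rng = np.random.default_rng(0)
batch = rng.choice(len(group_ids), size=32, p=probs)  # minority examples now ~half the batch
```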

Akvilė Žemgulytė (Google DeepMind) - Accurate proteome-wide missense variant effect prediction with AlphaMissense: READ

This paper is about AlphaMissense, an adaptation of AlphaFold fine-tuned on human and primate variant population frequency databases to predict the pathogenicity of missense variants. It achieves state-of-the-art results across various genetic and experimental benchmarks and provides a catalogue of predictions that classifies 89% of all possible human missense variants as either likely benign or likely pathogenic.

Jurgis Pašukonis (Google DeepMind) - Mastering Diverse Domains through World Models: READ

This paper is about DreamerV3, a general and scalable reinforcement learning algorithm based on world models. With fixed hyperparameters it performs well across diverse domains and shows favorable scaling properties. It is also the first algorithm to collect diamonds in Minecraft from scratch without human data or curricula, paving the way for broader applications in complex decision-making problems.

Modestas Jurčius (FittyAI) - Parallel Neurosymbolic Integration with Concordia: READ

This paper is about Concordia, a flexible neurosymbolic framework that addresses limitations of previous approaches by being agnostic to both the deep network and the logic theory, supporting a wide range of probabilistic theories and facilitating both supervised and unsupervised training, demonstrating improved accuracy in various tasks beyond NLP and data classification.

Vaiva Vasiliauskaitė (ETH Zurich) - How accurate are neural approximations of complex network dynamics?: READ

This paper is about developing neural network-based approximations for complex dynamical systems described by ordinary differential equations, proposing key elements for effective modeling, advocating for evaluation methods beyond classical statistical learning theory, and introducing a null model for inference confidence, ultimately enabling accurate deep learning approximations of high-dimensional, nonlinear systems across diverse network structures and inputs.

Mantas Mažeika (Center for AI Safety) - DecodingTrust: A Comprehensive Assessment of Trustworthiness in GPT Models: READ

This paper is about conducting a comprehensive trustworthiness evaluation of large language models, particularly GPT-4 and GPT-3.5, across multiple dimensions like toxicity, bias, robustness, privacy, and fairness, revealing previously unknown vulnerabilities such as susceptibility to generating toxic outputs, leaking private information, and increased vulnerability of GPT-4 to misleading prompts, thereby highlighting the trustworthiness gaps in these models.

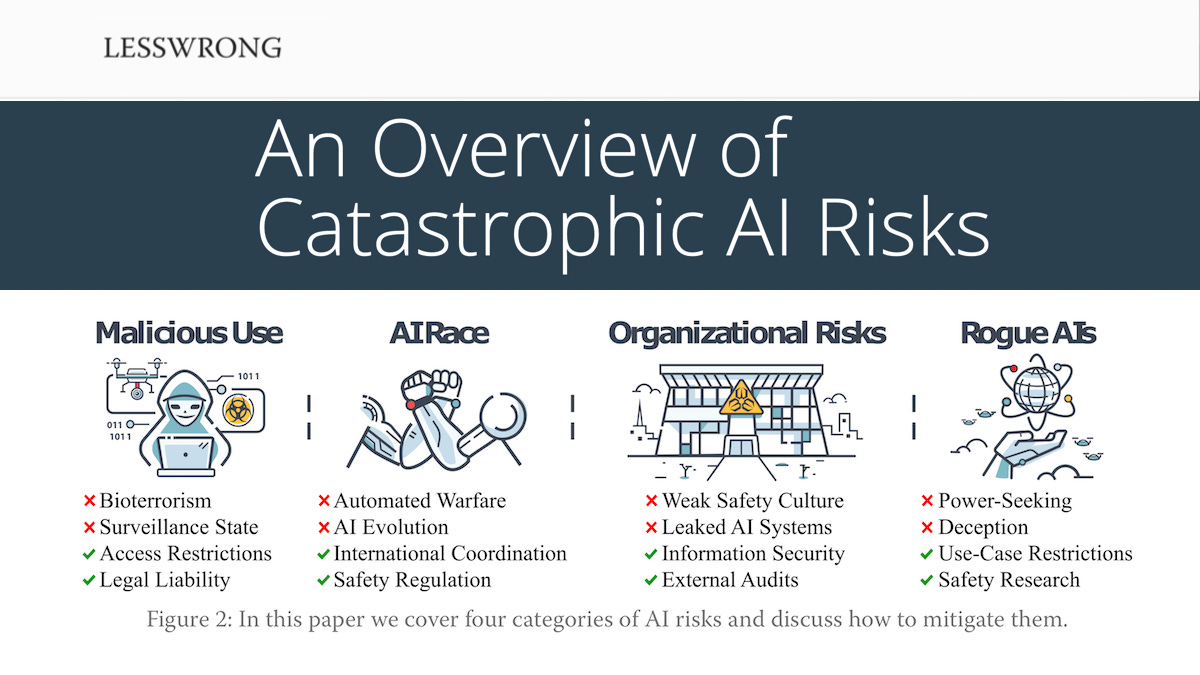

Mantas Mažeika (Center for AI Safety) - An Overview of Catastrophic AI Risks: READ

This paper is about categorizing and discussing the main sources of catastrophic risks posed by advanced artificial intelligence (AI), organized into four categories: malicious use, AI race, organizational risks, and rogue AIs, with the aim of enhancing understanding and guiding collective efforts to mitigate these dangers, ensuring the safe development and deployment of AI technologies.

Povilas Gudžius, Olga Kurasova, Vytenis Darulis and Ernestas Filatovas (Vilnius University) - AutoML-Based Neural Architecture Search for Object Recognition in Satellite Imagery: READ

This paper is about employing Neural Architecture Search (NAS) for optimizing satellite imagery object recognition, where traditional networks like UNET and MACU struggle with dataset variability and require manual recalibration. The study compares top-performing networks, applies NAS to enhance the MACU architecture, and successfully develops NAS-MACU, which outperforms conventional networks, particularly in low-information environments, demonstrating NAS's potential in remote sensing and across diverse datasets.

Justas Dauparas (Institute for Protein Design, University of Washington) - De novo design of luciferases using deep learning: READ

This paper is about a novel deep-learning-based approach called 'family-wide hallucination' for designing enzymes, which generates idealized protein structures with diverse pocket shapes and sequences. Using this method, the researchers successfully created highly selective and efficient artificial luciferases for specific luciferin substrates, marking a significant advancement in computational enzyme design with potential broad applications in biomedicine.

Justas Dauparas (Institute for Protein Design, University of Washington) - End-to-end learning of multiple sequence alignments with differentiable Smith–Waterman: READ

This paper is about implementing a differentiable version of the Smith-Waterman algorithm for joint learning of multiple sequence alignments (MSAs) and downstream machine learning tasks, introducing SMURF for unsupervised contact prediction, and demonstrating that this method can improve structure predictions in AlphaFold2. The findings highlight the potential of differentiable dynamic programming in neural network pipelines and caution against optimizing protein sequence predictions with incompletely understood methods.
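
The core idea of a differentiable Smith-Waterman is to replace every hard `max` in the dynamic program with a smooth maximum (temperature times log-sum-exp), so the alignment score becomes a smooth function of the similarity matrix. Below is a generic NumPy sketch with a linear gap penalty; it is an assumption-laden illustration, not the paper's SMURF implementation. It computes only the forward value; in an autodiff framework the same recursion yields gradients with respect to `sim`.

```python
import numpy as np

def soft_smith_waterman(sim, gap=-1.0, temp=1.0):
    """Smoothed Smith-Waterman local alignment score with a linear gap penalty.

    Each max(...) of the classic recursion is replaced by temp * logsumexp(... / temp).
    As temp -> 0 the result approaches the classic (hard) Smith-Waterman score.
    """
    n, m = sim.shape
    h = np.zeros((n + 1, m + 1))  # row/column 0 stay 0: local alignments may start anywhere
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            options = np.array([
                0.0,                                  # start a new local alignment
                h[i - 1, j - 1] + sim[i - 1, j - 1],  # align residue i with residue j
                h[i - 1, j] + gap,                    # gap in the second sequence
                h[i, j - 1] + gap,                    # gap in the first sequence
            ])
            h[i, j] = temp * np.logaddexp.reduce(options / temp)
    # Smooth maximum over all possible end positions of the local alignment.
    return temp * np.logaddexp.reduce(h[1:, 1:].ravel() / temp)

a, b = np.array(list("ACGT")), np.array(list("ACGT"))
sim = np.where(a[:, None] == b[None, :], 2.0, -1.0)  # hypothetical match/mismatch scores
score = soft_smith_waterman(sim, gap=-1.0, temp=0.01)  # near the hard score of 8
```

Raising `temp` spreads credit over many near-optimal alignments (and raises the score), which is what gives useful gradients during training.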

Justas Dauparas (Institute for Protein Design, University of Washington) - Design of stimulus-responsive two-state hinge proteins: READ

This paper is about designing "hinge" proteins that switch between two distinct, fully structured conformations in response to environmental stimuli, akin to biological transistors, using techniques to create an energy landscape with two minima, with verification through X-ray crystallography, electron microscopy, and other methods demonstrating atomic-level accuracy and closely coupled conformational and binding equilibria.

Ignas Budvytis (University of Cambridge) - SFD2: Semantic-guided Feature Detection and Description: READ

This paper is about a novel approach to visual localization that extracts globally reliable features by embedding high-level semantics into both detection and description processes, enhancing keypoint matching accuracy and reducing feature sensitivity to appearance changes, thereby outperforming previous methods in terms of accuracy and speed in large-scale, long-term visual localization tasks.

Ignas Budvytis (University of Cambridge) - HuManiFlow: Ancestor-Conditioned Normalising Flows on SO(3) Manifolds for Human Pose and Shape Distribution Estimation: READ

This paper is about HuManiFlow, a novel method for monocular 3D human pose and shape estimation that overcomes the ill-posed nature of the problem by predicting accurate, consistent, and diverse distributions of plausible 3D solutions. The approach uses the human kinematic tree to factorize full-body pose, employs normalizing flows respecting SO(3) manifold structure for per-body-part poses, and uses probabilistic training losses, outperforming current probabilistic methods on the 3DPW and SSP-3D datasets.

Karolis Martinkus (ETH Zurich) - Discovering Graph Generation Algorithms: READ

This paper is about a novel method for constructing generative models for graphs, where instead of traditional probabilistic or deep generative models, an evolutionary search with a graph neural network-based fitness function is used to find an algorithm that generates data, offering benefits like better out-of-distribution generalization and direct interpretability, with competitive performance to deep models and potential to discover true graph generative processes.

Karolis Martinkus (ETH Zurich) - AbDiffuser: Full-Atom Generation of In-Vitro Functioning Antibodies: READ

This paper is about introducing AbDiffuser, an equivariant and physics-informed diffusion model for generating antibody 3D structures and sequences, featuring a new protein structure representation, a novel architecture for aligned proteins, and enhanced diffusion priors for denoising, resulting in significant memory complexity reduction and successful in silico and in vitro validation, including the discovery of effective HER2 antibodies.

Rokas Gipiškis (Vilnius University) - The Impact of Adversarial Attacks on Interpretable Semantic Segmentation in Cyber–Physical Systems: READ

This paper is about exploring the interconnection of adversarial attacks and interpretable semantic segmentation in deep learning models, particularly in industrial cyber-physical systems (ICPSs), where it investigates gradient-based interpretability extensions, evaluates the impact of dense adversary generation attacks on segmentation outputs, and introduces a method to visualize the similarity of attacked saliency maps, ultimately aiming to enhance the safety and reliability of intelligent systems.

Donatas Laurinavičius, Rytis Maskeliūnas and Robertas Damaševičius (Kaunas University of Technology and Silesian University of Technology) - Improvement of Facial Beauty Prediction Using Artificial Human Faces Generated by Generative Adversarial Network: READ

This paper is about a novel deep learning method for evaluating human facial attractiveness, utilizing a generative adversarial network (GAN) to create artificial faces and a convolutional neural network (CNN) for more accurate beauty predictions compared to multilayer perceptron (MLP) models, with an emphasis on the potential of using synthetic faces for improved accuracy and possibilities for facial beauty modifications in latent space.

Karolis Misiūnas (Google DeepMind) - Neural Architecture Search for Energy Efficient Always-on Audio Machine Learning: READ

This paper is about improving neural architecture search (NAS) for energy-efficient neural networks in mobile and edge computing, jointly optimizing network accuracy, energy efficiency, and memory usage. The authors employ a hybrid search strategy combining Bayesian and regularized evolutionary methods, using a random forest model to predict energy consumption and early stopping to reduce the computational load. The resulting networks have significantly lower energy consumption and memory footprint than MobileNetV1/V2 while maintaining or slightly improving accuracy, and the authors also discuss the computational trade-offs in audio classification tasks.
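
The "regularized evolution" half of such a hybrid search is a simple algorithm: sample a few models from the population, mutate the best one, and retire the oldest model rather than the worst. This is a minimal sketch on a hypothetical toy search space (10 on/off architecture choices, fitness = number of ones as a stand-in for a real accuracy/energy objective); the paper's Bayesian component, energy predictor, and early stopping are not modeled.

```python
import collections
import random

def regularized_evolution(fitness, random_arch, mutate, cycles=300,
                          population_size=20, sample_size=5, seed=0):
    """Aging/regularized evolution: tournament selection, and the OLDEST model dies."""
    rng = random.Random(seed)
    population = collections.deque()  # (architecture, fitness) pairs, oldest on the left
    history = []
    while len(population) < population_size:
        arch = random_arch(rng)
        population.append((arch, fitness(arch)))
        history.append(population[-1])
    for _ in range(cycles):
        candidates = rng.sample(list(population), sample_size)
        parent_arch, _ = max(candidates, key=lambda item: item[1])
        child = mutate(parent_arch, rng)
        population.append((child, fitness(child)))
        history.append(population[-1])
        population.popleft()  # remove the oldest member, not the worst one
    return max(history, key=lambda item: item[1])

# Toy search space (hypothetical): 10 on/off architecture choices.
def random_arch(rng):
    return tuple(rng.randint(0, 1) for _ in range(10))

def mutate(arch, rng):
    i = rng.randrange(len(arch))
    return arch[:i] + (1 - arch[i],) + arch[i + 1:]

best_arch, best_fitness = regularized_evolution(sum, random_arch, mutate)
```

Removing the oldest model instead of the worst keeps the population from clinging to architectures that scored well once by luck, which matters when fitness evaluations are noisy.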

Laurynas Karazija (University of Oxford) - Diffusion Models for Zero-Shot Open-Vocabulary Segmentation: READ

This paper is about a new method for zero-shot open-vocabulary segmentation that overcomes the ambiguity of contrastive training on image-text pairs by using text-to-image diffusion models to generate a variety of support images for each textual category. The approach, which also considers the contextual background for better object localization and segmentation, leverages pre-trained self-supervised feature extractors grounded in natural language, offering explainable predictions and strong performance on various benchmarks, including a lead of more than 10% on the Pascal VOC benchmark.

Shubham Juneja, Povilas Daniušis, Virginijus Marcinkevičius (Neurotechnology and Vilnius University) - Visual Place Recognition Pre-Training for End-to-End Trained Autonomous Driving Agent: READ

This paper is about improving the robustness of end-to-end autonomous driving systems against unseen weather and lighting conditions by using pre-training based on the visual place recognition (VPR) method. The study demonstrates that VPR pre-training enhances the agent's resistance to such conditions compared to the conventional ImageNet pre-training, with evaluations in the CARLA environment showing statistical consistency, and provides an open-source code repository for further research.

Povilas Daniušis (Neurotechnology) - Testing multivariate normality by testing independence: READ

This paper is about a multivariate normality test based on the Kac-Bernstein characterization of the normal distribution, which applies statistical independence tests to sums and differences of data samples. An empirical study indicates that the test is more efficient than alternative methods for high-dimensional data.
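
The Kac-Bernstein characterization says that for independent, identically distributed X1 and X2, the sum X1 + X2 and the difference X1 - X2 are independent only if the distribution is normal. A minimal sketch of a test built on it follows, under stated assumptions: the sample is split into two halves to form i.i.d. pairs, and independence is checked with distance correlation plus a permutation test. The paper's actual choice of independence test is not specified here, and all names are hypothetical.

```python
import numpy as np

def _double_centered_distances(z):
    """Pairwise Euclidean distance matrix, double-centered as in distance correlation."""
    dist = np.linalg.norm(z[:, None, :] - z[None, :, :], axis=-1)
    return dist - dist.mean(axis=0) - dist.mean(axis=1)[:, None] + dist.mean()

def _dcor_from_centered(a, b):
    dcov2 = (a * b).mean()
    return np.sqrt(max(dcov2, 0.0) / np.sqrt((a * a).mean() * (b * b).mean()))

def kac_bernstein_normality_test(data, n_permutations=199, seed=0):
    """Return (distance correlation of sums vs differences, permutation p-value)."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data, dtype=float)
    if data.ndim == 1:
        data = data[:, None]
    data = rng.permutation(data)  # random split into two independent halves
    half = len(data) // 2
    x1, x2 = data[:half], data[half:2 * half]
    a = _double_centered_distances(x1 + x2)
    b = _double_centered_distances(x1 - x2)
    observed = _dcor_from_centered(a, b)
    # Null distribution: re-pairing sums with shuffled differences breaks any dependence.
    exceed = 0
    for _ in range(n_permutations):
        perm = rng.permutation(half)
        if _dcor_from_centered(a, b[np.ix_(perm, perm)]) >= observed:
            exceed += 1
    p_value = (exceed + 1) / (n_permutations + 1)
    return observed, p_value

rng = np.random.default_rng(1)
dcor_gauss, p_gauss = kac_bernstein_normality_test(rng.normal(size=(600, 2)))
dcor_exp, p_exp = kac_bernstein_normality_test(rng.exponential(size=(600, 2)))
```

A small p-value rejects normality; for the skewed exponential sample, the size of the difference is tied to the size of the sum, so the dependence is easy to detect.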

Mantas Lukauskas, Tomas Rasymas, Matas Minelga, Domas Vaitmonas (Hostinger) - Large Scale Fine-Tuned Transformers Models Application for Business Names Generation: READ

This paper is about using transformer-based natural language models to generate business names and investigating how model size affects performance. It compares several architectures, including GPT2-Medium and GPT-Neo models, on a dataset of over 250,000 business names. GPT2-Medium achieves the best perplexity score, whereas human evaluators favor GPT-Neo-1.3B; surprisingly, larger models such as GPT-Neo-2.7B do not significantly outperform much smaller ones.
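
Since the comparison hinges on perplexity, here is the standard definition as a small sketch: perplexity is the exponential of the average negative log-likelihood per token, and lower is better. The token log-probabilities below are hypothetical; in practice they would come from the fine-tuned model scoring held-out business names.

```python
import math

def perplexity(token_log_probs):
    """exp of the average negative log-likelihood per token (natural log)."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))

# Hypothetical: a model that assigns probability 1/4 to every token
# is as uncertain as a uniform choice among 4 options.
ppl = perplexity([math.log(0.25)] * 8)
```

Perplexity measures how well a model predicts held-out text, not how appealing its generated names are, which is one reason it can disagree with human evaluations.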

Next part soon!!!