Cursor raised $900M 💸, Anthropic’s AI is writing its own blog 🤖✍️, ElevenLabs released a new realistic voice model 🗣️🎙️, a new Gemini version 🚀, and ChatGPT connectors 🔌🧠

AI Connections #54 - a weekly newsletter about interesting blog posts, articles, videos, and podcast episodes about AI

NEWS 📚

“Cursor’s Anysphere raises $900 million with $9.9B valuation” - article by TechCrunch: READ

This article reports that Anysphere, the maker of the AI coding assistant Cursor, has raised $900 million at a $9.9 billion valuation as its ARR surpassed $500 million, doubling every two months. The round cements Cursor’s lead in the fast-growing “vibe coder” space while the company expands from individual subscriptions to high-value enterprise licenses.

“Anthropic’s AI is writing its own blog — with human oversight” - article by TechCrunch: READ

This article about Claude Explains covers Anthropic’s new AI-generated blog, which features posts drafted by Claude and refined by human editors to showcase collaborative content creation—highlighting both the potential and challenges of using AI for professional writing in a media landscape increasingly experimenting with automation.

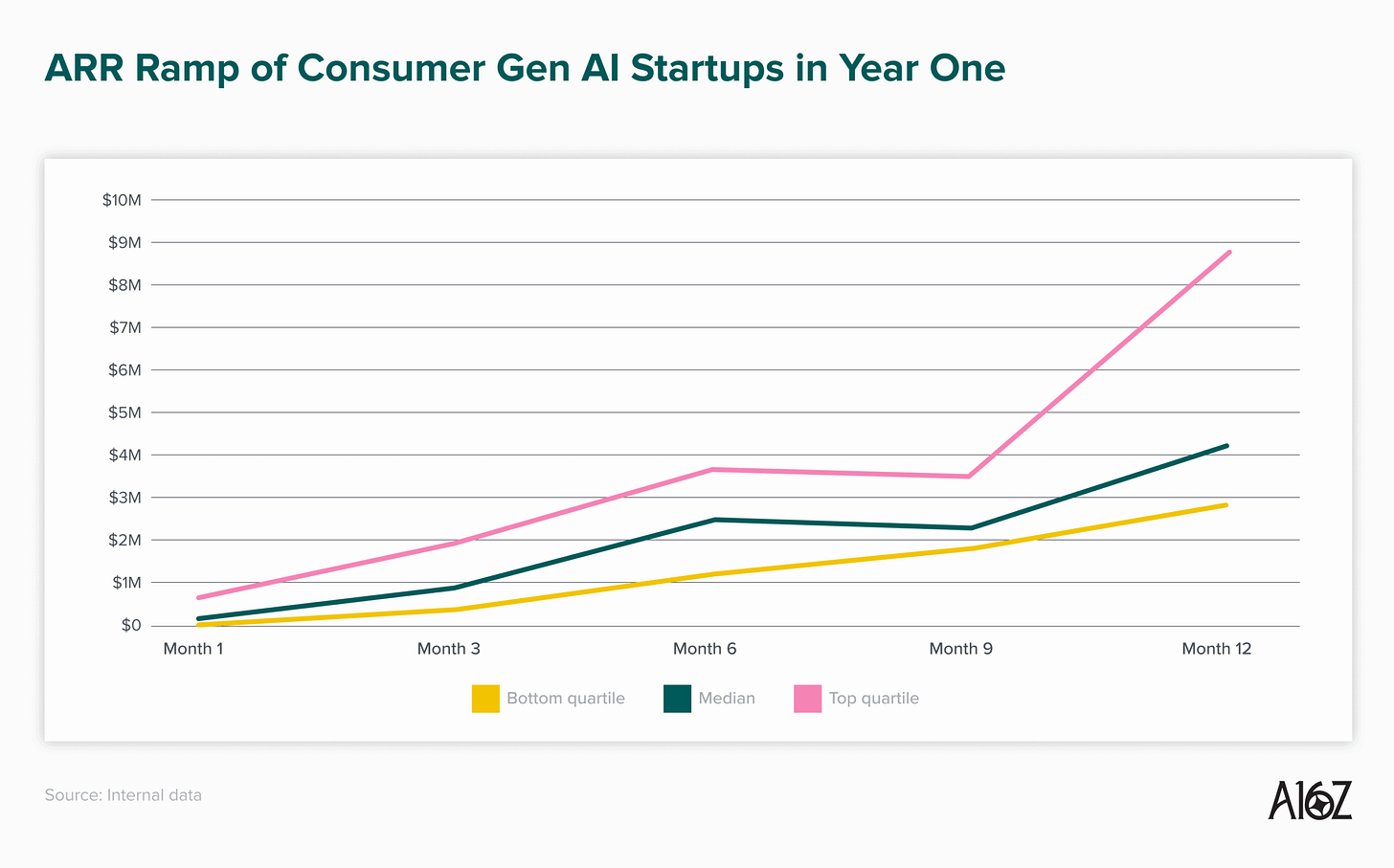

“What ‘Working’ Means in the Era of AI Apps” - blog post by a16z: READ

This blog post about AI startup growth benchmarks reveals that median AI companies today are growing much faster than their pre-AI peers, with enterprise startups reaching over $2M ARR and consumer startups $4.2M ARR in year one—driven by faster product cycles, strong user demand, and even B2C companies emerging as serious, revenue-generating businesses.

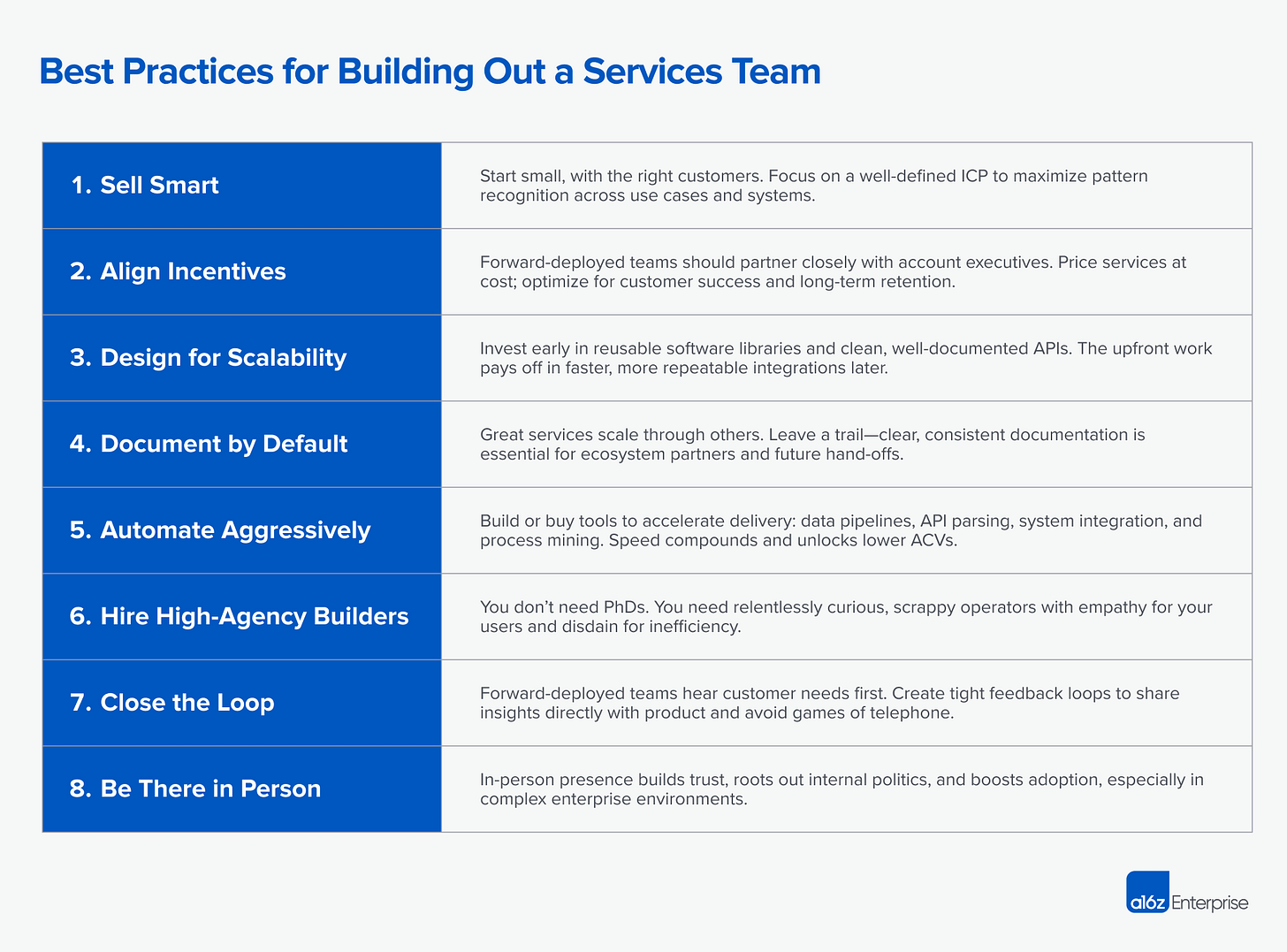

“Trading Margin for Moat: Why the Forward Deployed Engineer Is the Hottest Job in Startups” - blog post by a16z: READ

This blog post explains why the most successful AI startups are hiring forward deployed engineers to deliver hands-on implementation, trading short-term margins for long-term defensibility by deeply integrating into enterprise workflows.

“Zooming in: Efficient regional environmental risk assessment with generative AI” - blog post by Google: READ

This blog post presents a new method that combines physics-based models with generative AI to efficiently produce accurate, high-resolution regional climate risk projections, enabling better planning for extreme weather and environmental hazards.

“We Made Top AI Models Compete in a Game of Diplomacy. Here’s Who Won.” - blog post by Every: READ

This blog post about AI Diplomacy explores how large language models (like OpenAI’s o3, Claude 4 Opus, and DeepSeek R1) compete, lie, and strategize in a modified version of the game Diplomacy—revealing surprising behavioral traits and offering a dynamic new benchmark for evaluating trust, collaboration, and reasoning in AI.

“Specification Engineering” - blog post by Joshua Purtell: READ

This blog post about Specification Engineering argues that as AI increasingly dominates software complexity, we need declarative specifications with durable guarantees to replace fragile human-held knowledge—enabling synchronized updates across prompts, code, and evaluations, and turning AI tools like Synth into collaborators that enforce and evolve system logic.

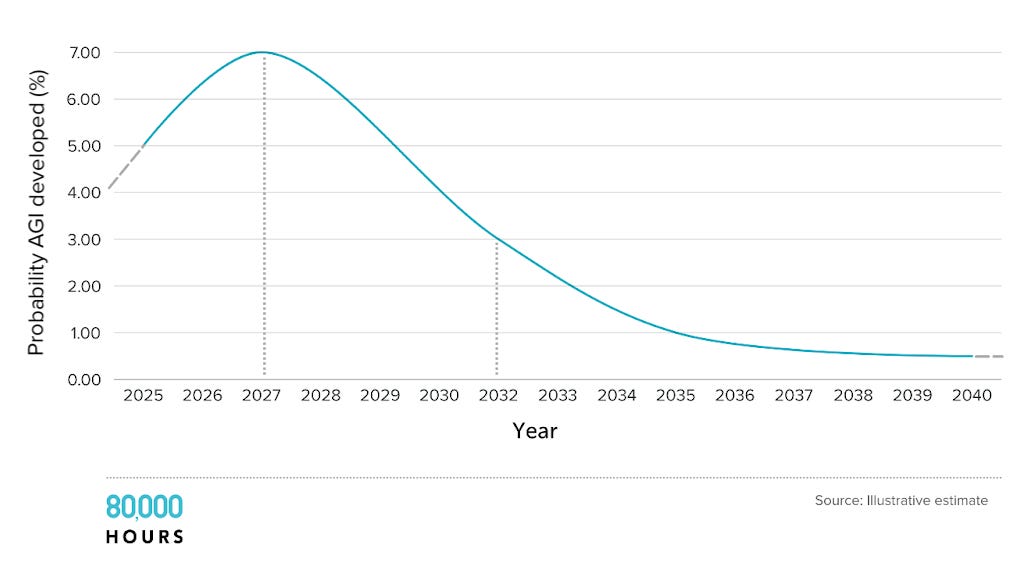

“Why I don’t think AGI is right around the corner” - blog post by Dwarkesh Patel: READ

This blog post about AGI timelines and the roadblocks to truly transformative AI argues that while today’s LLMs show flashes of intelligence, their inability to learn continuously limits their usefulness in real workflows—pushing forecasts for truly adaptive AI agents and complex task automation (like end-to-end taxes or long-horizon editing) out to 2028–2032, even as reasoning capabilities and short-term demos continue to impress.

NEW RELEASES 🚀

“ElevenLabs released v3, the most expressive text-to-speech model” - TRY

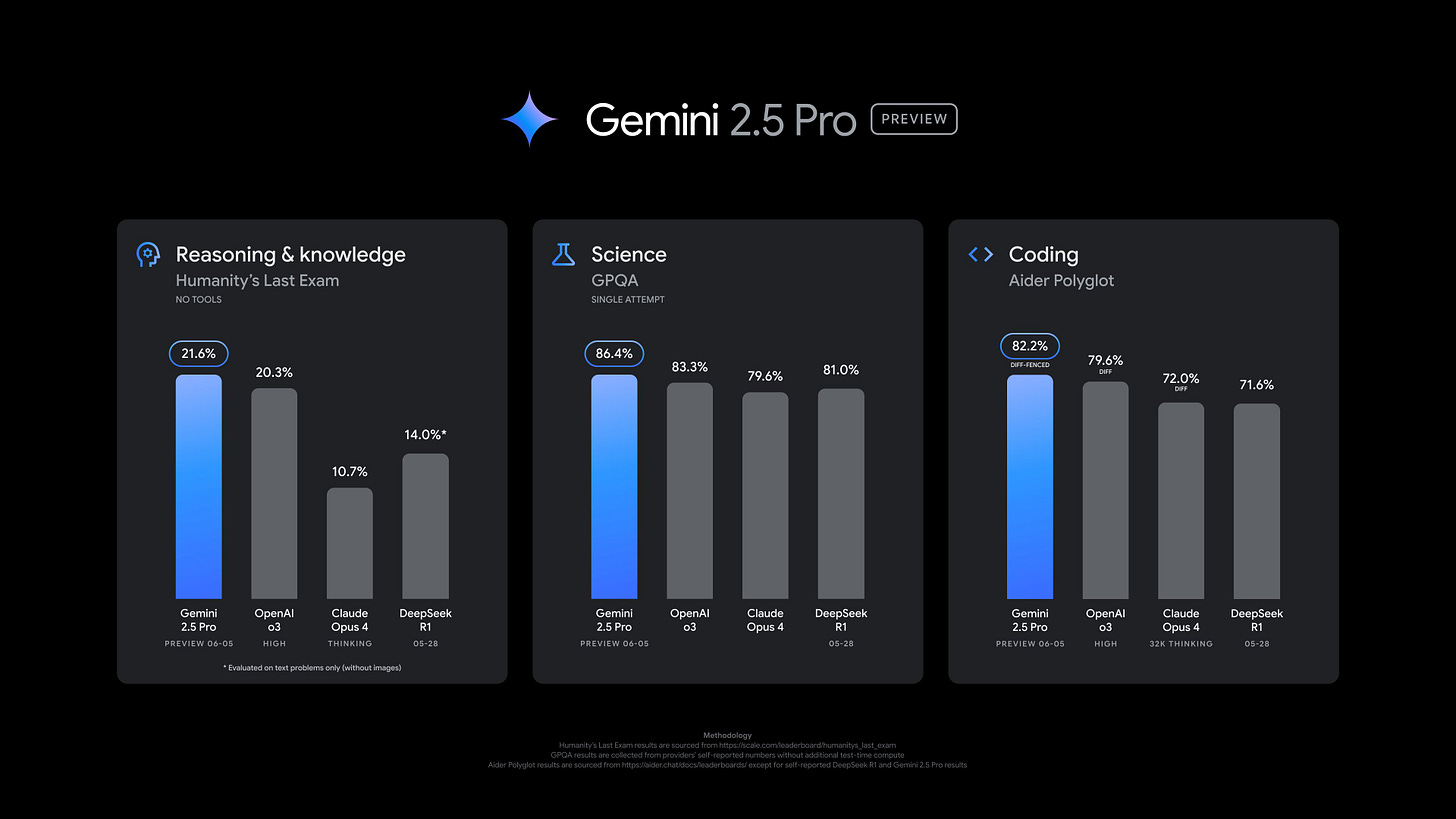

“Google released Gemini 2.5 Pro Preview (06-05)” - TRY

“ChatGPT introduces meeting recording and connectors for Google Drive, Box, and more” - TRY

“Cursor 1.0 is here! BugBot, Background Agent access to everyone, and one-click MCP install” - TRY

RESEARCH PAPERS 📚

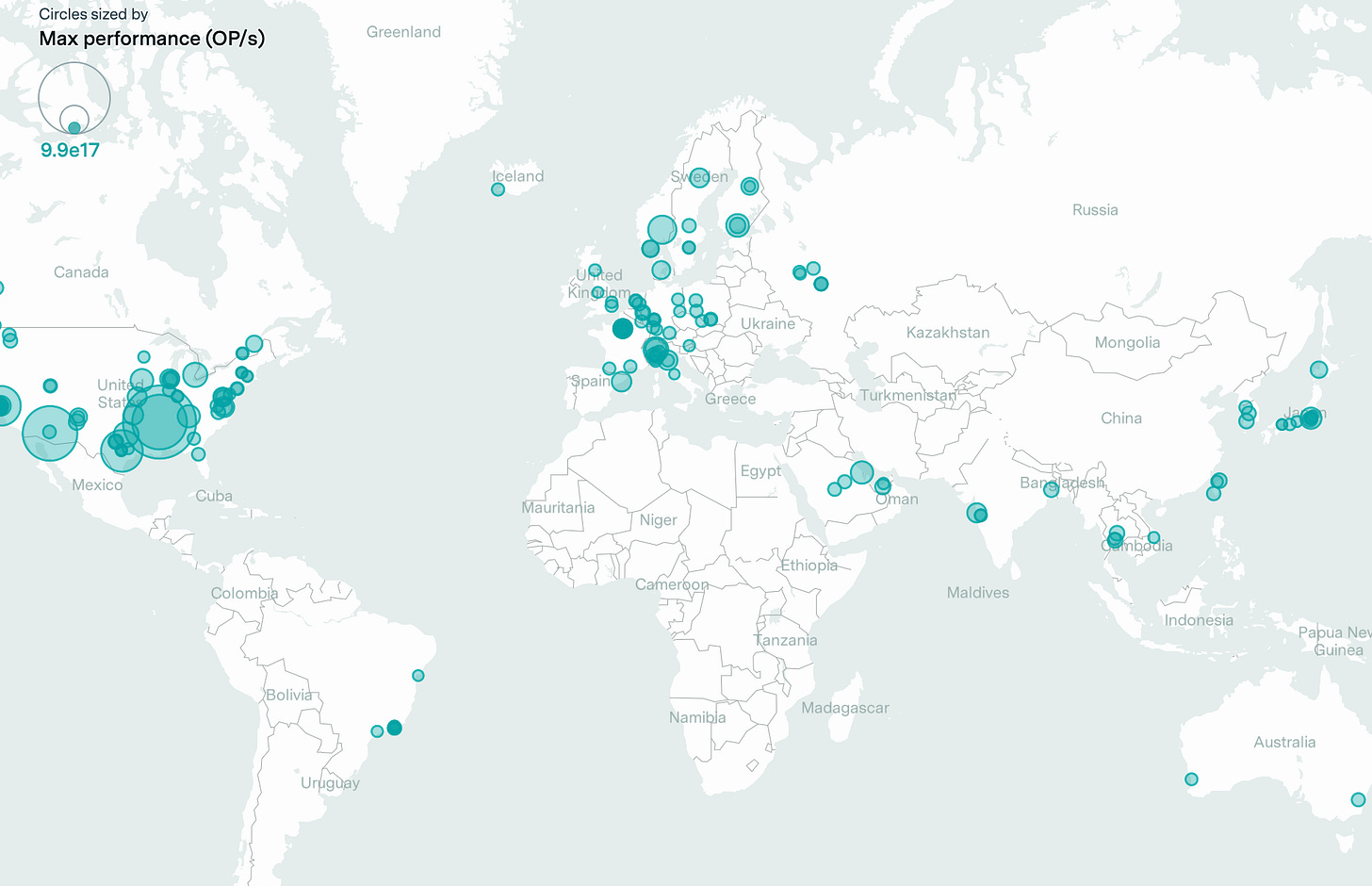

“Trends in AI Supercomputers” - research paper by Konstantin F. Pilz, James Sanders, Robi Rahman, Lennart Heim: READ

This research paper about AI supercomputers presents a dataset of 500 systems from 2019 to 2025, showing that performance has doubled every 9 months, while hardware cost and power needs have doubled annually — with commercial players dominating ownership and the U.S. holding 75% of total capacity. If current trends continue, by 2030 the leading AI supercomputer could cost $200B, use 9 GW of power, and operate with 2 million chips, raising major questions for policy, infrastructure, and global competitiveness.
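To put the doubling times quoted above on a common footing, here is a minimal Python sketch that converts a doubling time into a yearly growth multiple. The function name `annual_growth_factor` is made up for illustration and is not taken from the paper; the sketch only compounds the stated rates and does not reproduce the paper's 2030 estimates, which depend on baseline figures not given in this summary.

```python
def annual_growth_factor(doubling_time_months: float) -> float:
    """Growth multiple over 12 months for a quantity that doubles
    every `doubling_time_months` months (hypothetical helper)."""
    return 2 ** (12 / doubling_time_months)


# Rates as stated in the summary above.
performance_per_year = annual_growth_factor(9)   # performance doubles every 9 months
cost_per_year = annual_growth_factor(12)         # cost and power double annually

print(f"performance: x{performance_per_year:.2f} per year")
print(f"cost and power: x{cost_per_year:.2f} per year")

# Compounding over five years shows how quickly the two curves diverge.
years = 5
print(f"performance over {years} years: x{performance_per_year ** years:.0f}")
print(f"cost and power over {years} years: x{cost_per_year ** years:.0f}")
```

A 9-month doubling time works out to roughly 2.5x per year, against 2x per year for cost and power.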

“The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity” - research paper by Apple: READ

This research paper about Large Reasoning Models (LRMs) shows that while LRMs generate detailed reasoning traces and outperform standard LLMs on medium-complexity tasks, they unexpectedly collapse on high-complexity problems and even underperform on simple ones—revealing critical limitations in their reasoning consistency, algorithmic rigor, and scalability despite increased inference effort.

“How much do language models memorize?” - research paper by Meta, Nvidia and Google: READ

This research paper about measuring model capacity introduces a new method to quantify how much a language model “knows” about individual datapoints, distinguishing unintended memorization from true generalization, and finds that GPT-style models store about 3.6 bits per parameter—with memorization peaking before models begin to generalize in a process resembling grokking.
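For a sense of scale, the sketch below turns the 3.6 bits-per-parameter figure into an approximate storage capacity in megabytes. It is back-of-the-envelope arithmetic only: the helper name `capacity_megabytes` and the example model sizes are illustrative assumptions, not values or code from the paper.

```python
BITS_PER_PARAMETER = 3.6  # figure quoted in the summary above


def capacity_megabytes(num_parameters: int) -> float:
    """Approximate memorization capacity in megabytes (hypothetical helper).

    Multiplies the parameter count by the quoted bits-per-parameter figure,
    then converts bits to bytes (divide by 8) and bytes to MB (divide by 1e6).
    """
    bits = num_parameters * BITS_PER_PARAMETER
    return bits / 8 / 1e6


# Example model sizes chosen only for illustration.
for n in (125_000_000, 1_000_000_000, 7_000_000_000):
    print(f"{n:>13,} parameters -> about {capacity_megabytes(n):,.0f} MB")
```

Under this assumption, a 1-billion-parameter model would have a capacity of roughly 450 MB.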

“The Common Pile v0.1: An 8TB Dataset of Public Domain and Openly Licensed Text” - research paper by Common Pile Initiative: READ

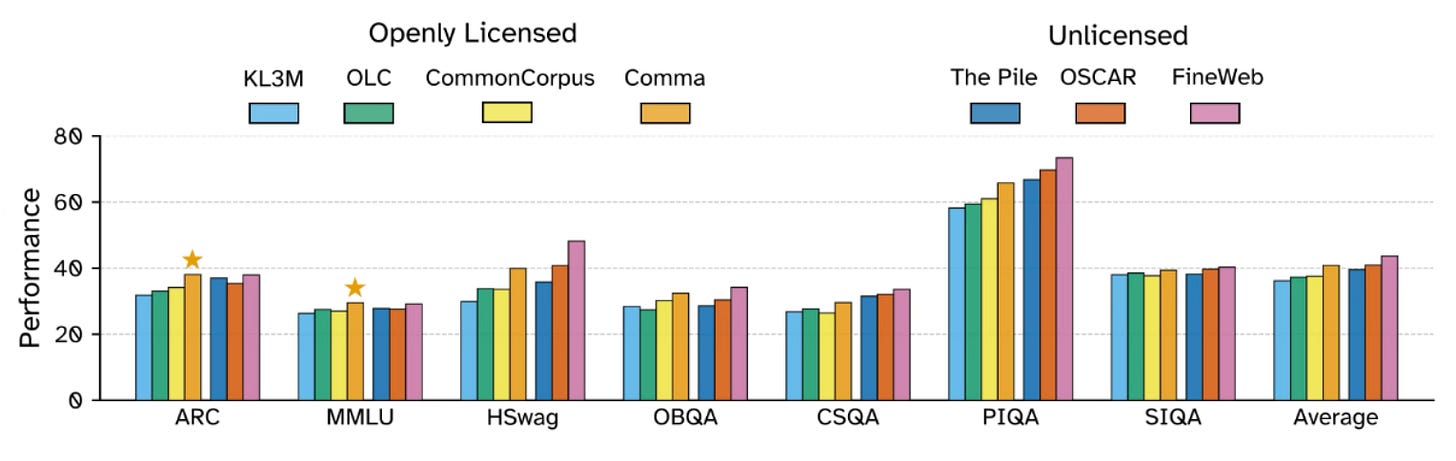

This paper about Common Pile v0.1 introduces an 8TB openly licensed dataset for LLM pretraining, curated from 30 diverse sources, and validates its quality by training two 7B-parameter models—Comma v0.1-1T and 2T—which match the performance of LLaMA models trained on unlicensed data, offering a strong open alternative for ethical LLM development.

VIDEO 🎥

OTHER 💎

“How Anthropic teams use Claude Code” - guide by Anthropic: READ