🎉 Happy 2024!!! 🚀 AI predictions for 2024, 🎤 Yann LeCun interview, 📚 Copyright Doesn’t Apply To AI Training in Japan, 🏗️ Mamba architecture analysis and 🎧 new podcast AI library

AI Connections #46 - a weekly newsletter about interesting blog posts, articles, videos, and podcast episodes about AI

MY 2024 PREDICTIONS

2023 was an impressive year for AI, with major news almost every week, so I was curious about what 2024 might bring. Here are my 10 predictions for 2024:

1. Hollywood-quality video generation

In 2024, we can expect text-to-video platforms to start generating HD-quality video.

Tools you can try:

2. AI-generated music will be in the Spotify charts

We are already hearing impressive AI-generated songs that imitate the voices of popular singers like Drake, Rihanna, and The Weeknd.

In 2024, we will see more legally released AI music featuring popular singers' voices in the top charts.

Tools you can try:

3. Small LLMs will be available on smartphones

Right now, most mobile apps that use AI models rely on models deployed in the cloud, which adds latency and cost. Running AI models directly on the phone could be a game changer for many applications, for example:

Self-driving cars:

FlowPilot is an open-source fork of comma.ai's OpenPilot, developed by Mankaran32 and team.

Apple has already signaled that it wants to run AI models on iPhones rather than in the cloud. Article by Ars Technica: READ

4. Open-sourced models reach GPT-4 level

Every week, impressive new open-source models are added to the Hugging Face Open LLM Leaderboard.

Mistral has already released Mixtral 8x7B, which outperforms GPT-3.5 (the model behind the original ChatGPT) on most benchmarks.

Mistral's CEO has said they will have a GPT-4-level model in 2024, and there is also a good chance that Meta will release Llama 3, which could surpass GPT-4.

5. First reliable AI agents in action

AI agents took off in 2023 with BabyAGI and AutoGPT, but the main problems with these systems are that they are unreliable and expensive.

In 2024, we can expect the first reliable AI agents for simple assistant tasks such as email, booking, and analysis.

Tools you can try:

6. Synthetic data will help create new-level AI models

There is a good chance that we will run out of high-quality training data, and some predict this could happen as early as 2025. One solution is to use models like GPT-4 or Stable Diffusion to generate more data for the next generation of models.

Microsoft has already done this, showing that even models five times smaller, like Phi-1.5, can outperform bigger models when trained on high-quality synthetic data. More information here: READ

For images, too, there is a new diffusion-based algorithm that can generate thousands of high-quality pictures in just a couple of minutes.

Synthetic data will be used more and more, both to sidestep legal issues and to keep improving models.

7. Multilingual Large Language models

Large language models like GPT-4, Gemini, and Llama have become a world-changing technology, but their best results are mostly in English. This creates a huge gap between people who speak English and those who don’t.

In 2024, we will see more large language models that can speak and write in many different languages. One such project, Cohere For AI's AYA, is working toward this goal. You can read more here. We are planning to release the AYA dataset and a model very soon.

8. Realistic AI Avatars

After the generative AI boom, the next AI boom will be interactive AI, meaning that we will interact with large language models through voice and avatars.

In 2024, we will see better and more realistic avatar technologies that will change many industries but also make deepfakes more convincing, so we need to be careful.

Tools you can try:

9. AI Influencers boom

AI influencers are already earning five-figure incomes on social media, with successful projects like:

3M followers on Instagram

245k followers on Instagram

AI influencers never get tired, are always available, and can "travel" anywhere at the click of a button. We will see more of them in 2024.

10. AI everywhere

In 2024, AI will be everywhere, and if you want to know more:

READ 📚

“2023: A Year of Groundbreaking Advances in AI and Computing” blog post by Google DeepMind: READ

This blog post reflects on the significant progress made in AI research and applications throughout 2023, reiterates Google Research's and Google DeepMind's commitment to developing and deploying AI innovations responsibly and ethically, grounded in human values, and emphasizes the importance of collaboration in harnessing AI's potential to benefit society while mitigating risks.

“How Not to Be Stupid About AI, With Yann LeCun” article by Wired: READ

This article is about an interview with Yann LeCun, Meta's chief AI scientist and a pioneer of modern AI, discussing his optimistic stance on AI's future, opposition to dystopian narratives, advocacy for open-source AI, and his influential role in AI development, including his work at Meta and NYU, and his contribution to launching Meta's open source large language model, Llama 2, while addressing concerns about potential misuse of open-source AI and his belief in humanity's ability to manage these risks.

“Governing AI for Humanity” report by United Nations: READ

This report is about the UN Secretary-General's AI Advisory Body's Interim Report, "Governing AI for Humanity," which advocates for aligning AI development with international norms, proposes strengthening international governance of AI through seven key functions, including risk assessment and global collaboration on data and resources to achieve Sustainable Development Goals (SDGs), and emphasizes the need for enhanced accountability and equitable representation of all countries.

“Japan Goes All In: Copyright Doesn’t Apply To AI Training” article by BIIA: READ

This article is about Japan's decision not to enforce copyrights on data used in AI training, aiming to boost its AI technology sector and compete globally, a move that has sparked debate between the creative community and business and academic sectors in Japan, with implications for international AI regulation and data sharing, especially in relation to Western literary resources and Japan's rich anime content.

“Three Predictions for the New Year: Biden’s Executive Order on Safe, Secure and Trustworthy AI in 2024” blog post by Weights & Biases: READ

This blog post is about how Biden's recent Executive Order, combined with significant international regulatory changes like the EU AI Act, is expected to bring increased scrutiny and the need for greater accountability in machine learning practices in 2024, impacting various industries, especially those dealing with sensitive data such as defense, critical infrastructure, and healthcare.

“Stuff we figured out about AI in 2023” blog post by Simon Willison: READ

“Structured State Spaces for Sequence Modeling (S4)” blog post by Stanford University: READ

This series of blog posts introduces the Structured State Space sequence model (S4). The first post discusses the motivating setting of continuous time series, i.e. sequence data sampled from an underlying continuous process, which is characterized by being smooth and very long. It briefly introduces the S4 model and gives an overview of the subsequent posts.
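To make "sequence data sampled from an underlying continuous process" concrete, here is a minimal sketch of the linear state space model that S4 builds on, reduced to a single scalar state for readability (an assumption: S4 itself uses an N-dimensional structured state matrix with a special initialization, which this omits). The continuous system x'(t) = a·x(t) + b·u(t), y(t) = c·x(t) is discretized with the bilinear transform at step size dt, and the same outputs can be computed either step by step as a recurrence or all at once as a convolution with the kernel K_k = c·ā^k·b̄. The function names are illustrative only.

```python
from typing import List, Tuple


def discretize_bilinear(a: float, b: float, dt: float) -> Tuple[float, float]:
    """Bilinear (Tustin) discretization of the scalar system x' = a*x + b*u."""
    denom = 1.0 - dt / 2.0 * a
    a_bar = (1.0 + dt / 2.0 * a) / denom
    b_bar = dt * b / denom
    return a_bar, b_bar


def ssm_recurrent(u: List[float], a: float, b: float, c: float, dt: float) -> List[float]:
    """Run the discretized SSM step by step: x_k = a_bar*x_{k-1} + b_bar*u_k, y_k = c*x_k."""
    a_bar, b_bar = discretize_bilinear(a, b, dt)
    x = 0.0
    ys = []
    for u_k in u:
        x = a_bar * x + b_bar * u_k
        ys.append(c * x)
    return ys


def ssm_convolutional(u: List[float], a: float, b: float, c: float, dt: float) -> List[float]:
    """Same outputs as a causal convolution with kernel K_k = c * a_bar**k * b_bar."""
    a_bar, b_bar = discretize_bilinear(a, b, dt)
    kernel = [c * (a_bar ** k) * b_bar for k in range(len(u))]
    return [sum(kernel[j] * u[k - j] for j in range(k + 1)) for k in range(len(u))]


if __name__ == "__main__":
    signal = [1.0, 0.0, 0.5, -0.25, 0.0, 2.0]
    rec = ssm_recurrent(signal, a=-0.5, b=1.0, c=1.0, dt=0.1)
    conv = ssm_convolutional(signal, a=-0.5, b=1.0, c=1.0, dt=0.1)
    print(all(abs(r - s) < 1e-12 for r, s in zip(rec, conv)))  # True
```

The recurrent form is what makes fast autoregressive generation possible, while the convolutional form lets training process the whole sequence in parallel; this equivalence is the basic idea the S4 posts build on.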

“Andreessen Horowitz on digital health funding in 2024 and AI regulation” article by Mobi Health News: READ

READ (RESEARCH PAPERS) 📚

“LLaMA Beyond English: An Empirical Study on Language Capability Transfer” research paper by Fudan University: READ

This research paper investigates the transfer of capabilities from large language models (LLMs) like LLaMA, predominantly pretrained on English, to non-English languages, through extensive empirical research involving vocabulary extension, further pretraining, and instruction tuning, employing various benchmarks to assess model knowledge and response quality, and demonstrating that comparable performance can be achieved with significantly less pretraining data, offering insights for the development of non-English LLMs.

“DocLLM: A layout-aware generative language model for multimodal document understanding” research paper by JPMorgan AI Research: READ

This research paper introduces DocLLM, a novel extension to large language models (LLMs) tailored for visual documents, leveraging textual semantics and spatial layout through bounding box information instead of image encoders, employing disentangled attention matrices and a unique pre-training objective for infilling text, resulting in superior performance on core document intelligence tasks compared to state-of-the-art LLMs, and demonstrating strong generalization to unseen datasets.

“Improving Text Embeddings with Large Language Models” research paper by Microsoft: READ

This research paper presents a straightforward method to generate high-quality text embeddings using synthetic data generated by proprietary LLMs and less than 1k training steps, avoiding complex training pipelines and the limitations of manually collected datasets, by fine-tuning open-source decoder-only LLMs with standard contrastive loss, achieving strong performance on text embedding benchmarks without labeled data and setting new state-of-the-art results when fine-tuned with a mix of synthetic and labeled data.
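The "standard contrastive loss" mentioned above is typically an InfoNCE objective: each query embedding should be more similar to its own positive document than to the other documents in the batch. Below is a minimal pure-Python sketch, assuming cosine similarity, in-batch negatives only, and a hypothetical temperature of 0.05; the paper's exact settings (such as its temperature or use of hard negatives) are not reproduced here.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce_loss(queries: List[List[float]], docs: List[List[float]], temperature: float = 0.05) -> float:
    """Mean InfoNCE loss; docs[i] is the positive for queries[i], all other docs act as negatives."""
    total = 0.0
    for i, q in enumerate(queries):
        logits = [cosine(q, d) / temperature for d in docs]
        # Log-sum-exp with max subtraction for numerical stability.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denominator - logits[i]  # -log softmax probability of the positive
    return total / len(queries)


if __name__ == "__main__":
    queries = [[1.0, 0.0], [0.0, 1.0]]
    aligned_docs = [[0.9, 0.1], [0.1, 0.9]]
    swapped_docs = [[0.1, 0.9], [0.9, 0.1]]
    print(info_nce_loss(queries, aligned_docs) < info_nce_loss(queries, swapped_docs))  # True
```

When every document looks identical to the query, the loss equals log(batch size), which is a handy sanity check; a lower temperature sharpens the softmax and penalizes near-miss negatives more strongly.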

“Self-Play Fine-Tuning Converts Weak Language Models to Strong Language Models” research paper by Zixiang Chen and team: READ

This research paper introduces Self-Play fine-tuning (SPIN), a novel fine-tuning method for Large Language Models (LLMs) that enhances model performance without additional human-annotated data, by employing a self-play mechanism where the LLM generates and refines its own training data, progressively improving its alignment with the target data distribution, and demonstrating significant performance improvements on various benchmarks, suggesting the potential of self-play in achieving human-level LLM performance without expert input.
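The self-play objective can be read as a DPO-style logistic loss in which the "preferred" response is the human-written one from the fine-tuning data and the "rejected" response is generated by the model from the previous iteration. A minimal sketch of the per-example loss, assuming the sequence log-probabilities have already been computed and using a hypothetical scaling factor lam = 0.1:

```python
import math


def spin_loss(
    logp_new_real: float,
    logp_old_real: float,
    logp_new_synth: float,
    logp_old_synth: float,
    lam: float = 0.1,
) -> float:
    """Per-example self-play loss: logistic loss on the gap between log-likelihood ratios.

    *_real:  log p(y | x) of the human-written response y
    *_synth: log p(y' | x) of the response y' generated by the previous-iteration model
    new = model being trained, old = frozen model from the previous iteration
    """
    margin = lam * ((logp_new_real - logp_old_real) - (logp_new_synth - logp_old_synth))
    # log(1 + exp(-margin)), written in a numerically stable form.
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    # Identical new and old models: margin is 0, so the loss is log 2.
    print(spin_loss(-10.0, -10.0, -12.0, -12.0))
    # The new model prefers the human response more and its own old output less: lower loss.
    print(spin_loss(-9.0, -10.0, -13.0, -12.0))
```

At the start of each iteration the new and old models are identical, so the margin is zero and the loss equals log 2; minimizing it pushes the model to raise the likelihood of human responses relative to its own previous generations.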

“LLM in a flash: Efficient Large Language Model Inference with Limited Memory” research paper by Apple: READ

This research paper addresses the challenge of efficiently running large language models (LLMs) on devices with limited DRAM by storing parameters on flash memory and optimizing data transfer through techniques like "windowing" and "row-column bundling," enabling the operation of models up to twice the size of available DRAM and significantly increasing inference speed, thereby facilitating effective LLM inference on memory-constrained devices.
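The "windowing" idea can be illustrated with a toy cache: keep in DRAM only the neurons that were active for the last few tokens, and for each new token read from flash only the neurons that are not already cached. This is a simplified sketch under assumed inputs (a set of active neuron IDs per token, which in practice would come from a sparsity predictor); it counts loads rather than moving real weights, and the class name is hypothetical.

```python
from collections import Counter, deque
from typing import Deque, Iterable, Set


class WindowedNeuronCache:
    """Toy sliding-window neuron cache: DRAM holds neurons used by the last `window` tokens."""

    def __init__(self, window: int) -> None:
        self.window = window
        self.history: Deque[Set[int]] = deque()
        self.refcount: Counter = Counter()  # how many tokens in the window use each neuron

    def step(self, active: Iterable[int]) -> Set[int]:
        """Process one token; return the neuron IDs that had to be read from flash."""
        active_set = set(active)
        to_load = {n for n in active_set if self.refcount[n] == 0}
        self.history.append(active_set)
        for n in active_set:
            self.refcount[n] += 1
        # Once the window is full, release neurons used only by the oldest token.
        if len(self.history) > self.window:
            for n in self.history.popleft():
                self.refcount[n] -= 1
                if self.refcount[n] == 0:
                    del self.refcount[n]
        return to_load

    def cached(self) -> Set[int]:
        """Neuron IDs currently held in DRAM."""
        return set(self.refcount)


if __name__ == "__main__":
    cache = WindowedNeuronCache(window=2)
    loads = [cache.step(a) for a in [{1, 2, 3}, {2, 3, 4}, {3, 4, 5}, {1, 5}]]
    print([sorted(s) for s in loads])  # [[1, 2, 3], [4], [5], [1]]
    print(sorted(cache.cached()))  # [1, 3, 4, 5]
```

In this example only 6 neuron reads hit flash instead of 10, because consecutive tokens tend to reuse many of the same neurons; the window size trades DRAM usage against flash traffic.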

“MosaicBERT: A Bidirectional Encoder Optimized for Fast Pretraining” research paper by MosaicML: READ

This research paper introduces MosaicBERT, an optimized BERT-style encoder architecture for fast pretraining, incorporating advanced elements like FlashAttention, ALiBi, GLU, dynamic token removal, and low precision LayerNorm, along with an efficient training recipe, achieving high performance on the GLUE benchmark with significantly reduced pretraining time and cost, thus enabling cost-effective custom pretraining of BERT-style models, with model weights and code made open source.
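ALiBi, one of the components listed above, replaces position embeddings with a fixed, head-specific linear penalty on attention scores that grows with the distance between query and key. A minimal sketch of the slopes and bias, assuming the head count is a power of two and the symmetric form -m·|i - j|, which suits a bidirectional encoder (the original ALiBi paper applied the penalty causally in a decoder). The function names are illustrative, not MosaicBERT's code.

```python
from typing import List


def alibi_slopes(num_heads: int) -> List[float]:
    """Geometric head slopes 2^(-8/n), 2^(-16/n), ... (assumes num_heads is a power of two)."""
    if num_heads <= 0 or num_heads & (num_heads - 1):
        raise ValueError("this sketch only handles a positive power-of-two head count")
    start = 2.0 ** (-8.0 / num_heads)
    return [start ** (h + 1) for h in range(num_heads)]


def alibi_bias(seq_len: int, slope: float) -> List[List[float]]:
    """Bias added to one head's attention logits: -slope * |i - j| (symmetric, encoder-style)."""
    return [[-slope * abs(i - j) for j in range(seq_len)] for i in range(seq_len)]


if __name__ == "__main__":
    slopes = alibi_slopes(8)
    print(slopes[0], slopes[-1])  # 0.5 0.00390625
    for row in alibi_bias(4, slopes[0]):
        print(row)
```

Because the bias depends only on distance, nothing is learned for positions, which is what lets ALiBi models handle sequences longer than those seen during pretraining.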

“Beyond Chinchilla-Optimal: Accounting for Inference in Language Model Scaling” research paper by MosaicML: READ

Large language model (LLM) scaling laws are empirical formulas that estimate how model quality changes as parameter count and training data increase. However, these formulas, including the popular DeepMind Chinchilla scaling laws, leave out the cost of inference. This research paper modifies the Chinchilla scaling laws to calculate the optimal LLM parameter count and pre-training data size for training and deploying a model of a given quality and inference demand. The analysis is done both in terms of a compute budget and real-world costs, and finds that LLM researchers expecting reasonably large inference demand (~1B requests) should train models smaller and longer than Chinchilla-optimal.
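The core trade-off can be sketched with the parametric Chinchilla loss fit L(N, D) = E + A/N^α + B/D^β, using the published constants E = 1.69, A = 406.4, B = 410.7, α = 0.34, β = 0.28, together with the usual approximations of about 6·N·D FLOPs for training and 2·N FLOPs per inference token. For a target loss, each parameter count N implies a required number of training tokens D, and we pick the N that minimizes training plus inference FLOPs. This is a simplified compute-only illustration on a coarse grid, not the paper's exact procedure or its real-world cost model; the target loss and inference demand below are arbitrary.

```python
from typing import Optional, Tuple

# Chinchilla parametric fit (Hoffmann et al., 2022): L(N, D) = E + A / N**ALPHA + B / D**BETA
E, A, B, ALPHA, BETA = 1.69, 406.4, 410.7, 0.34, 0.28


def tokens_for_loss(n_params: float, target_loss: float) -> Optional[float]:
    """Training tokens D needed for a model with n_params to reach target_loss (None if impossible)."""
    remainder = target_loss - E - A / n_params ** ALPHA
    if remainder <= 0:
        return None  # model too small to reach this loss with any amount of data
    return (B / remainder) ** (1.0 / BETA)


def optimal_params(target_loss: float, inference_tokens: float) -> Tuple[float, float]:
    """Grid-search N minimizing 6*N*D (training) + 2*N*inference_tokens (inference) FLOPs."""
    best: Optional[Tuple[float, float, float]] = None  # (total_flops, n_params, train_tokens)
    for i in range(301):
        n = 10 ** (8 + i * 0.01)  # 1e8 .. 1e11 parameters, log-spaced
        d = tokens_for_loss(n, target_loss)
        if d is None:
            continue
        total = 6 * n * d + 2 * n * inference_tokens
        if best is None or total < best[0]:
            best = (total, n, d)
    if best is None:
        raise ValueError("target loss unreachable on this grid")
    return best[1], best[2]


if __name__ == "__main__":
    for demand in (0.0, 1e13):  # hypothetical lifetime inference tokens
        n, d = optimal_params(target_loss=2.1, inference_tokens=demand)
        print(f"inference tokens={demand:.0e}: N={n:.2e}, D={d:.2e}, tokens/param={d / n:.0f}")
```

Adding an inference term that grows with N can only move the optimum toward smaller models, which then need more training tokens to hit the same loss: the "smaller and longer" conclusion in miniature.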

“Ferret: Refer and Ground Anything Anywhere at Any Granularity” research paper by Apple: READ

This research paper introduces Ferret, a multimodal large language model that can understand references to any region of an image, whether specified as a point, a bounding box, or a free-form shape, and can ground open-vocabulary descriptions to image regions. It uses a hybrid region representation that combines discrete coordinates with continuous visual features and is trained on GRIT, a new refer-and-ground instruction-tuning dataset.

“AI capabilities can be significantly improved without expensive retraining” research paper by Tom Davidson and team: READ

This research paper discusses the significant improvement of state-of-the-art AI systems through "post-training enhancements" like fine-tuning for web browser use, categorizing them into tool-use, prompting methods, scaffolding, solution selection, and data generation, and introduces a "compute-equivalent gain" metric to compare enhancements, revealing that most enhancements offer benefits exceeding a 5x increase in training compute, some even more than 20x, with development costs typically less than 1% of original training costs, but highlights the challenge in governing such enhancements due to the wide range of potential developers.
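The "compute-equivalent gain" metric asks how much more training compute the base model would have needed to match an enhancement's improvement. A minimal sketch, assuming (hypothetically) that benchmark score grows linearly in log10 of training compute with a known slope; the paper's actual estimation method and data are not reproduced here, and the numbers are invented for illustration.

```python
def compute_equivalent_gain(base_score: float, enhanced_score: float, score_per_decade: float) -> float:
    """Multiplier on training compute that would yield the same score gain.

    Assumes (hypothetically) score = a + score_per_decade * log10(training_compute),
    so a gain of `score_per_decade` points corresponds to 10x more compute.
    """
    if score_per_decade <= 0:
        raise ValueError("score must increase with compute for this model to make sense")
    return 10 ** ((enhanced_score - base_score) / score_per_decade)


if __name__ == "__main__":
    # Hypothetical numbers: +7 points from an enhancement, 10 points per 10x compute.
    print(round(compute_equivalent_gain(50.0, 57.0, 10.0), 2))  # 5.01
```

Under this assumed model, an enhancement worth 7 points corresponds to roughly a 5x increase in training compute; comparing that multiplier with the enhancement's development cost is the kind of comparison the paper makes.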

WATCH 🎥

LEARN 📚

“Transformers United 2023” course by Stanford University: LEARN

“Grokking Deep Learning” book by Andrew Trask: LEARN

AI TOOLS 💎

Numerous.ai, an AI tool for your Excel spreadsheets: TRY

Increase the quality of your old pictures using this code: TRY

“Assistive Video” new tool for text-to-video generation: TRY

COOL THINGS 😎

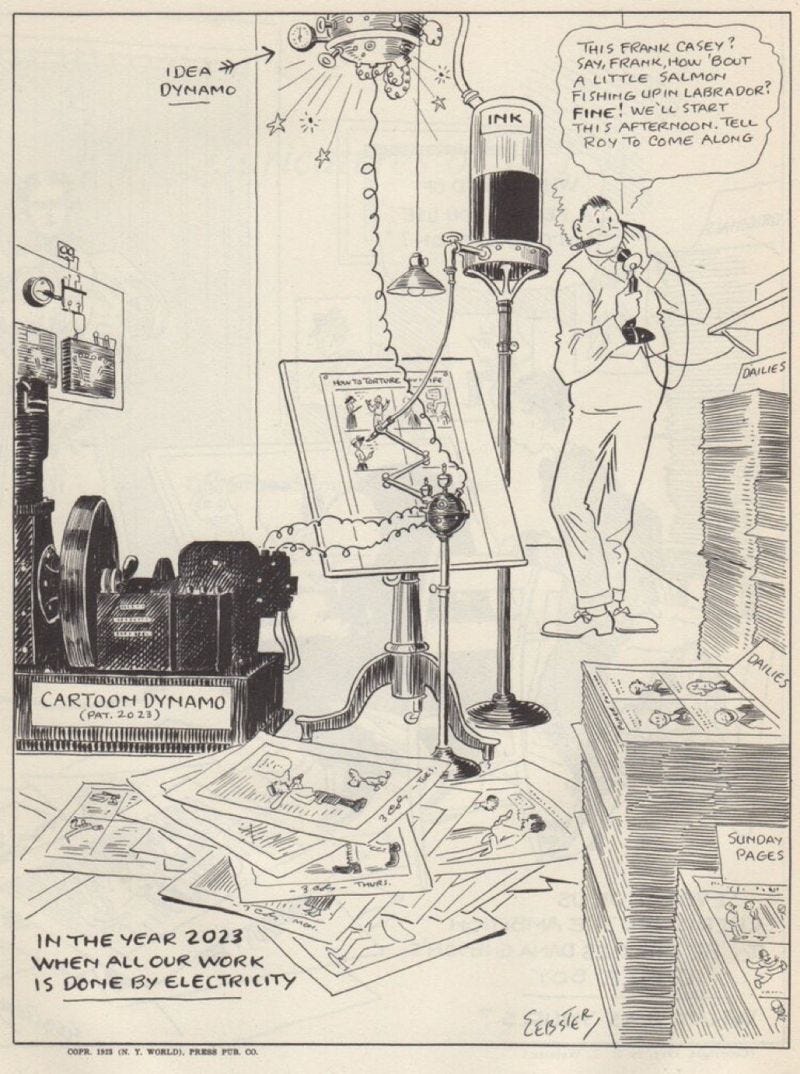

Art from 1928 showing how people imagined image generation by robots

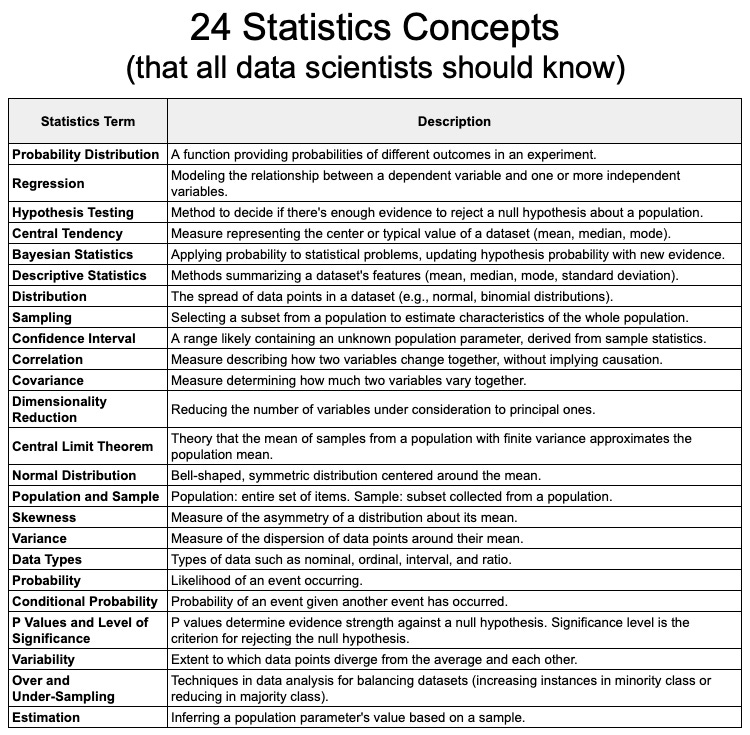

24 main statistics concepts for every data scientist

Midjourney V6 Image prompting and Leonardo motion Video. Credits: Alin_Reaper05