Ilya Sutskever’s Reading List

Ilya Sutskever, co-founder of OpenAI and one of the minds behind GPT, curated a list of about 30 essential AI papers and other resources, reportedly describing them as covering roughly 90% of what matters in the field today.

Reading List

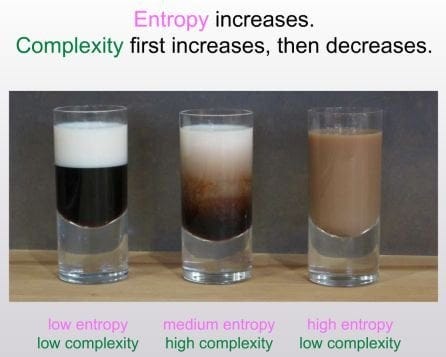

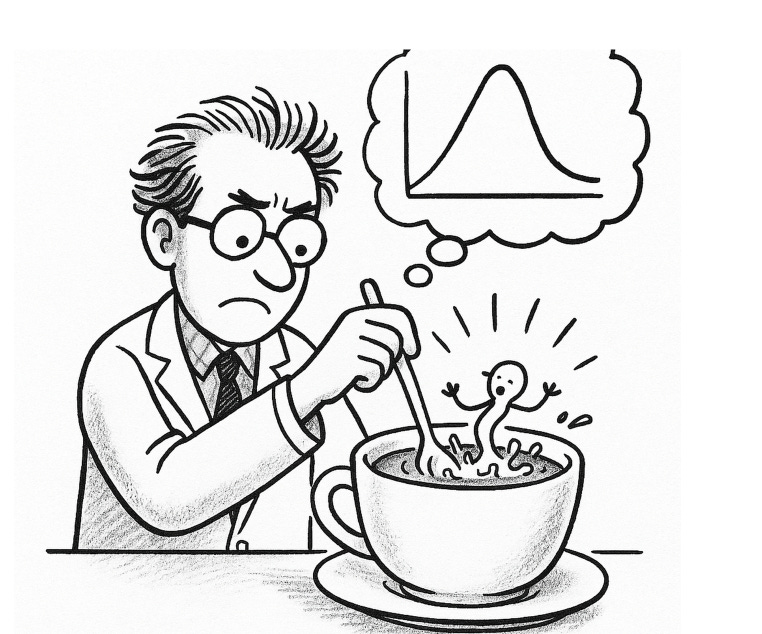

The First Law of Complexodynamics

Scott Aaronson explores why things in the universe often get more complex over time, peak in complexity, and then become simpler again. He proposes a new idea called complextropy, which tries to measure how hard it is to describe a system using a short computer program. This builds on a concept called Kolmogorov complexity, and suggests that complexity is highest in the middle of a system’s life. Because complextropy is hard to measure exactly, he uses file compression as a rough way to test the idea in practice.
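
To make the compression idea concrete, here is a minimal sketch that uses Python's `zlib` as a stand-in for "length of the shortest description". The helper name `compressed_size` and the three test strings are illustrative assumptions, not anything from Aaronson's post, and a compressed size is only a crude upper bound on Kolmogorov complexity.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Length in bytes of the zlib-compressed input (a crude, computable
    upper-bound proxy for Kolmogorov complexity)."""
    return len(zlib.compress(data, 9))


n = 10_000
rng = random.Random(0)  # fixed seed so the run is repeatable

ordered = b"a" * n                                    # perfectly regular
periodic = b"abcd" * (n // 4)                         # simple repeating pattern
noisy = bytes(rng.randrange(256) for _ in range(n))   # no structure to exploit

for name, data in [("ordered", ordered), ("periodic", periodic), ("noisy", noisy)]:
    print(name, compressed_size(data))
```

Random noise compresses worst here, even though it is not "complex" in the interesting sense. That gap is why a raw compressed size is only a starting point for measuring something like complextropy.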

The Unreasonable Effectiveness of Recurrent Neural Networks

Andrej Karpathy shows how Recurrent Neural Networks (RNNs) can learn to generate surprisingly realistic text just by reading lots of examples. RNNs work by remembering previous inputs, making them well suited to sequences like language. Karpathy also briefly covers LSTMs, a more advanced variant that trains more reliably and that he uses for the experiments in the post. His article makes RNNs feel both powerful and accessible, encouraging readers to try training their own.

Understanding LSTM Networks

Christopher Olah explains how LSTMs solve a major problem with RNNs: forgetting important information in long sequences. LSTMs use a memory cell and three gates to decide what to remember, forget, and output. This helps them handle tasks like translating sentences or recognizing speech, where past context really matters. Olah also walks through diagrams and explains related ideas like GRUs and attention to make the topic easier to grasp.
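
The gate mechanics are easier to see in code. Below is a minimal sketch of one LSTM step with scalar inputs and states, following the standard equations; the parameter dictionary and its values are hypothetical and chosen only for illustration (real LSTMs use weight matrices and vectors).

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell.

    p maps each gate name to a (w_x, w_h, bias) triple; the values used
    below are hypothetical, picked only to illustrate the behaviour.
    """
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    i = sigmoid(pre("input"))         # how much new information to write
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    g = math.tanh(pre("candidate"))   # candidate values to write
    c = f * c_prev + i * g            # updated memory cell
    h = o * math.tanh(c)              # new hidden state / output
    return h, c


# A cell biased to "remember": forget gate open, input gate nearly closed.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 1.0, 0.0),
}
h, c = lstm_step(x=1.0, h_prev=0.0, c_prev=0.8, p=params)
print(h, c)
```

With the forget gate saturated near 1 and the input gate near 0, the cell state passes through almost unchanged, which is the mechanism that lets LSTMs carry information across long sequences.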

Recurrent Neural Network Regularization

Zaremba, Sutskever, and Vinyals propose a better way to use dropout (a regularization method) with LSTMs. Regular dropout doesn’t work well for RNNs, so they apply it only to non-recurrent parts of the model. This tweak makes LSTMs perform better on tasks like language modeling, speech recognition, and machine translation. It helps the model generalize better and avoid overfitting to the training data.

Keeping Neural Networks Simple by Minimizing the Description Length of the Weights

Hinton and van Camp suggest reducing overfitting by keeping neural networks simple. They add small amounts of noise to the model’s weights during training, which forces it to use simpler, less precise values. This idea is based on the Minimum Description Length principle: the best model is the one that explains the data with the fewest bits. Their approach leads to better generalization, especially when there’s not much training data.

Pointer Networks

Vinyals, Fortunato, and Jaitly introduce Pointer Networks, a type of model that picks outputs directly from the input, rather than from a fixed list. This suits problems like sorting or the Travelling Salesman Problem, where the set of possible outputs depends on the length of the input. Pointer Networks use attention to "point" at parts of the input and choose them as the output. The model learns approximate solutions to these problems purely from data and outperforms sequence-to-sequence baselines that use input attention.

ImageNet Classification with Deep Convolutional Neural Networks

Krizhevsky, Sutskever, and Hinton built a deep convolutional neural network (CNN) that sharply improved image classification on the ImageNet dataset. It used techniques like ReLU activation, data augmentation, dropout, and GPU training to reduce errors and train faster. The model won the ILSVRC-2012 competition with a top-5 error of 15.3%, against 26.2% for the runner-up, and helped launch the modern deep learning era in computer vision. Their work showed the power of large CNNs for real-world tasks.

Order Matters: Sequence to Sequence for Sets

Vinyals, Bengio, and Kudlur show that the order of elements in input and output sequences can affect how well sequence-to-sequence models learn—even if the data is actually a set (where order shouldn't matter). They propose using attention to make input order irrelevant and a smarter loss function to explore the best output order during training. Their methods improve results on tasks like sorting, parsing, and geometric problems. This helps seq2seq models handle unordered data more effectively.

GPipe: Easy Scaling with Micro-Batch Pipeline Parallelism

Huang et al. introduce GPipe, a tool that makes it easier to train very large neural networks by splitting the model across multiple devices (like GPUs). Instead of training one big batch at once, GPipe splits it into smaller micro-batches and processes them in a pipeline, which keeps all devices busy and avoids memory issues. It was used to train models with hundreds of millions to billions of parameters—like a 6B-parameter multilingual Transformer—and still got great results. GPipe supports any model built as a sequence of layers, and includes smart memory tricks and load balancing to stay fast and efficient.
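
The pipelining idea can be sketched with a toy scheduler. The code below is a simplified model, not GPipe's implementation: it assumes every stage takes one clock tick per micro-batch, looks only at the forward pass, and ignores re-materialization. The function names are made up for this example.

```python
def forward_schedule(num_stages: int, num_micro_batches: int):
    """Return, for each clock tick, the (stage, micro_batch) pairs that run
    in parallel during the forward pass of a simple pipeline."""
    ticks = []
    for t in range(num_stages + num_micro_batches - 1):
        active = [(s, t - s) for s in range(num_stages)
                  if 0 <= t - s < num_micro_batches]
        ticks.append(active)
    return ticks


def bubble_fraction(num_stages: int, num_micro_batches: int) -> float:
    """Fraction of stage-time slots left idle while the pipeline fills and drains."""
    total_slots = num_stages * (num_stages + num_micro_batches - 1)
    busy_slots = num_stages * num_micro_batches
    return 1 - busy_slots / total_slots


for tick, active in enumerate(forward_schedule(num_stages=3, num_micro_batches=4)):
    print(tick, active)

print(bubble_fraction(4, 1), bubble_fraction(4, 8), bubble_fraction(4, 32))
```

Under these assumptions the idle fraction is (K - 1) / (M + K - 1) for K stages and M micro-batches, so splitting a batch into more micro-batches keeps the devices busier.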

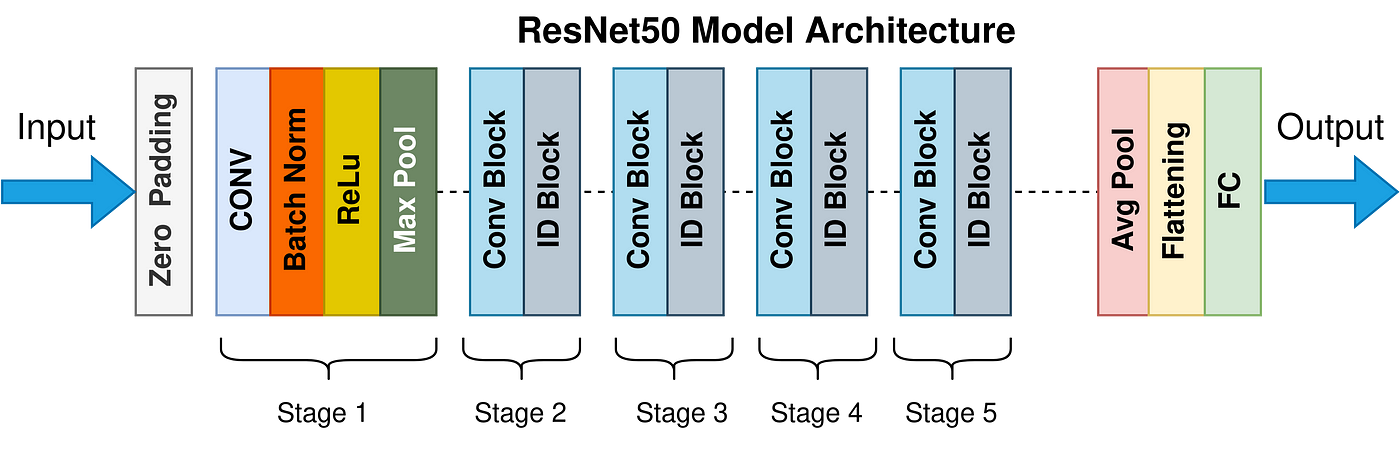

Deep Residual Learning for Image Recognition

He et al. introduce ResNet, a breakthrough model that made it easier to train very deep neural networks by adding shortcut connections that skip layers. These shortcuts let each block learn a small adjustment (a residual) to its input instead of a full transformation, which addresses the degradation problem where plain networks get worse as more layers are added. ResNets set new accuracy records on ImageNet with networks up to 152 layers deep and became the backbone for many modern vision tasks. The design also carries over to object detection and other tasks by making networks both deeper and easier to train.
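
A residual block is small enough to write out by hand. This is a minimal sketch using plain Python lists and two linear layers; it leaves out the convolutions and batch normalization that real ResNet blocks use, and the helper names are illustrative.

```python
def linear(weights, x):
    """Matrix-vector product for a list-of-rows weight matrix."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(v):
    return [max(0.0, a) for a in v]


def residual_block(x, w1, w2):
    """Compute relu(x + F(x)), where F(x) = w2 @ relu(w1 @ x)."""
    fx = linear(w2, relu(linear(w1, x)))          # the learned residual F(x)
    return relu([a + b for a, b in zip(x, fx)])   # shortcut adds the input back


x = [1.0, 2.0, 0.5]
zeros = [[0.0] * 3 for _ in range(3)]
print(residual_block(x, zeros, zeros))  # with F(x) = 0 the block passes x through
```

When the residual branch outputs zero, the block simply passes its (non-negative) input through, so extra blocks can start out as near-identity layers instead of having to learn the identity from scratch.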

Multi-Scale Context Aggregation by Dilated Convolutions

Yu and Koltun propose dilated convolutions, a method that helps neural networks capture more context without losing image resolution. Instead of shrinking the image with pooling, they "dilate" filters to see a wider area, making it perfect for dense tasks like semantic segmentation. They show that combining multiple dilation levels helps the model understand both fine details and global structure. Their approach improves accuracy on benchmarks like Pascal VOC and simplifies the network compared to earlier models.
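
Here is a small 1-D sketch of the idea (the paper works with 2-D images and keeps resolution with padding; this version is unpadded for brevity). The function names are illustrative.

```python
def dilated_conv1d(signal, kernel, dilation):
    """'Valid' 1-D convolution (cross-correlation) whose taps are `dilation` apart."""
    span = (len(kernel) - 1) * dilation
    return [
        sum(k * signal[i + j * dilation] for j, k in enumerate(kernel))
        for i in range(len(signal) - span)
    ]


def receptive_field(kernel_size, dilations):
    """Number of input positions one output sees after stacking the given layers."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


print(dilated_conv1d([1, 2, 3, 4, 5], [1, 1, 1], dilation=2))
print(receptive_field(3, [1, 1, 1]), receptive_field(3, [1, 2, 4]))
```

Three stacked 3-tap layers see 7 inputs with dilation 1 everywhere but 15 inputs with dilations 1, 2, 4, using the same number of weights and no pooling.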

Neural Message Passing for Quantum Chemistry

Gilmer et al. introduce Message Passing Neural Networks (MPNNs) to predict chemical properties of molecules using their graph structure. Instead of hand-crafting features, the model learns by letting atoms (nodes) pass messages along bonds (edges). After several rounds of message exchange, a readout function summarizes the whole molecule to make predictions. MPNNs outperform older methods on quantum chemistry benchmarks like QM9, and reach chemical accuracy for many molecular properties, helping with tasks like drug discovery.
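
The message, update, and readout steps can be sketched on a tiny graph. In this toy version the message and update functions are fixed arithmetic rules, whereas in an MPNN they are learned neural networks; the water-like molecule and its scalar features are hypothetical.

```python
def message_passing(node_states, edges, rounds):
    """Run `rounds` of sum-aggregation message passing on an undirected graph.

    node_states: dict node -> float feature; edges: list of (u, v) pairs.
    The message and update rules are fixed toy choices here; in an MPNN
    they are learned neural networks.
    """
    neighbors = {v: [] for v in node_states}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)

    h = dict(node_states)
    for _ in range(rounds):
        messages = {v: sum(h[u] for u in neighbors[v]) for v in h}  # aggregate
        h = {v: 0.5 * h[v] + 0.5 * messages[v] for v in h}          # update
    return h


def readout(h):
    """Order-independent summary of the whole graph."""
    return sum(h.values())


# Hypothetical toy molecule: one central atom bonded to two others.
atoms = {"O": 8.0, "H1": 1.0, "H2": 1.0}
bonds = [("O", "H1"), ("O", "H2")]
states = message_passing(atoms, bonds, rounds=1)
print(states, readout(states))
```

Because both the aggregation and the readout are sums, the result does not depend on the order in which atoms or bonds are listed, which is the property that makes this family of models a natural fit for molecular graphs.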

Attention is All You Need

Vaswani et al. present the Transformer, a model that replaces recurrence and convolutions with self-attention, making it faster and easier to train. The model learns how words relate to each other, no matter their position in the sequence, using multi-head attention and positional encodings. Transformers set new records in machine translation (like English → German) while training more efficiently than older models. This architecture became the foundation for models like BERT, GPT, and many more in natural language processing.
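
The core operation, scaled dot-product attention, fits in a few lines. This sketch uses plain lists and a single head, and omits the learned projections, masking, and positional encodings of the full model; the toy vectors are made up.

```python
import math


def softmax(scores):
    m = max(scores)                          # subtract the max for stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d_k)) V for lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)            # how much this query attends to each key
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
outputs, weights = attention([[5.0, 0.0], [1.0, 1.0]], keys, values)
print(weights)
print(outputs)
```

The first query lines up with the first key and so pulls mostly from the first value; the second query matches both keys equally and returns their average.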

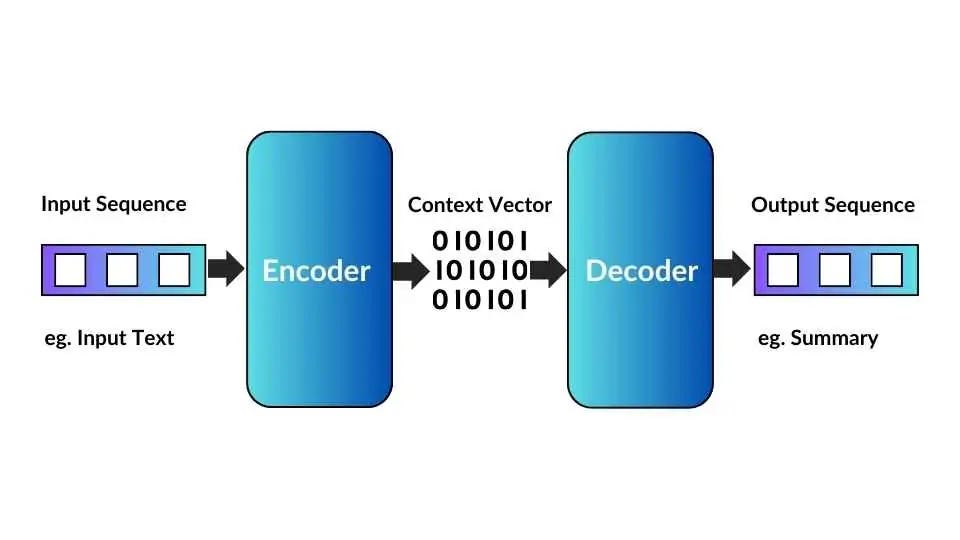

Neural Machine Translation by Jointly Learning to Align and Translate

Bahdanau, Cho, and Bengio introduce a powerful idea for machine translation: let the model focus on different parts of the input sentence while generating each translated word. This is called the attention mechanism, and it fixes a big problem in older models that squeezed entire sentences into a single fixed-length vector. Their model reads the sentence with a bidirectional RNN and then uses attention to choose which words to pay attention to when translating. The result is much better translations—especially for long or complex sentences.

Identity Mappings in Deep Residual Networks

He et al. improve ResNet by tweaking how the residual blocks are arranged. They keep the shortcut path a pure identity mapping, which copies the input forward unchanged, and move batch normalization and ReLU in front of the weight layers ("pre-activation") instead of applying an activation after the addition. This change lets signals pass directly between any two blocks, helps the model train more easily, and improves accuracy, even with networks that are hundreds of layers deep. They show that the new design works better on datasets like CIFAR-10 and ImageNet, suggesting that simple changes can make deep models both more powerful and more stable.

A Simple Neural Network Module for Relational Reasoning

Santoro et al. propose Relation Networks (RNs), a simple add-on to neural networks that helps them reason about relationships between objects. This is useful for tasks like visual question answering, where you need to compare things in an image. RNs work by looking at every pair of objects and learning how they relate. They outperform older models on datasets like CLEVR and bAbI and even solve physical reasoning problems, showing that RNs are a flexible and powerful way to teach neural networks to reason.
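
The "every pair of objects" computation can be sketched as follows. In the paper the pairwise function g and the final function f are small neural networks over object feature vectors; here they are hypothetical toy functions over numbers so the structure is easy to follow.

```python
from itertools import product


def relation_network(objects, g, f):
    """RN(O) = f(sum over all ordered pairs (i, j) of g(o_i, o_j))."""
    return f(sum(g(a, b) for a, b in product(objects, repeat=2)))


# Toy stand-ins for the learned functions (assumptions for illustration):
# g measures how far apart two "objects" are, f halves the total because
# every unordered pair is counted twice.
def pair_distance(a, b):
    return abs(a - b)


def halve(total):
    return total // 2


print(relation_network([1, 4, 6], pair_distance, halve))
print(relation_network([6, 1, 4], pair_distance, halve))
```

Summing over all pairs makes the output independent of the order in which objects are presented, so the module treats its input as a set.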

Variational Lossy Autoencoder

Chen et al. combine Variational Autoencoders (VAEs) with autoregressive models to build the Variational Lossy Autoencoder (VLAE). This model learns to compress data by keeping only the global structure (like shapes) while ignoring local details (like texture). It achieves this by using a decoder that can only model small local areas, forcing the global info into the latent code. The paper reports state-of-the-art density estimation on MNIST, OMNIGLOT, and Caltech-101 Silhouettes and competitive results on CIFAR-10, and the approach helps build better generative models for complex data.

Relational Recurrent Neural Networks

Santoro et al. enhance memory-based neural networks by introducing the Relational Memory Core (RMC), which lets different memory slots interact using attention. This helps the network understand relationships over time, improving its reasoning ability. RMC performs better than LSTMs in tasks like language modeling, reinforcement learning, and program execution. It shows that letting memory units “talk” to each other is key for solving more complex reasoning problems in sequences.

Quantifying the Rise and Fall of Complexity in Closed Systems: the Coffee Automaton

Aaronson, Carroll, and Ouellette study how complexity behaves differently from entropy in closed systems. Using a simple model where “coffee” and “cream” particles mix, they show that while entropy keeps increasing, complexity rises, peaks, and then falls. They define "apparent complexity" by compressing coarse-grained images of the system and measuring how hard they are to describe. This work gives a concrete, testable way to study complexity and shows how it can behave in surprising ways, even in simple systems.

Neural Turing Machines

Graves et al. introduce the Neural Turing Machine (NTM), a neural network with an external memory—like a computer you can train. NTMs learn to read from and write to memory using attention-like mechanisms, making them capable of learning simple algorithms like copying, sorting, and recalling sequences. They outperform regular LSTMs on many tasks that need structured memory. This architecture is an early step toward neural networks that can reason and learn like programs.

Deep Speech 2: End-to-End Speech Recognition in English and Mandarin

Baidu’s Deep Speech 2 uses a deep neural network to turn audio into text, without the complex, hand-engineered parts of traditional speech recognition systems. It works across different languages (English and Mandarin), handles accents and background noise, and is trained end-to-end on massive datasets. The model uses convolutional and recurrent layers, batch normalization, and training tricks like SortaGrad to boost performance. It even beats humans on some English benchmarks and works well in real-time applications with low delay.

Scaling Laws for Neural Language Models

Kaplan et al. show that bigger models, more data, and more compute reliably make language models better. They find that test loss follows power-law curves in each of these factors, so the gain from scaling up can be predicted in advance from smaller runs. They also find that larger models are more sample-efficient, reaching the same loss with fewer training tokens, so under a fixed compute budget it is better to train a very large model and stop well before convergence. Their findings give a roadmap for training efficient, high-performing models by balancing model size, dataset size, and compute.
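
A power law is a straight line on a log-log plot, which is what makes extrapolation possible. The sketch below fits one with ordinary least squares. The data is synthetic, generated from constants I picked for illustration (`true_a`, `true_alpha`); these are not measurements or fitted values from the paper.

```python
import math


def fit_power_law(xs, ys):
    """Least-squares fit of y = a * x**(-alpha) via a straight line in log-log space."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    count = len(xs)
    mean_x, mean_y = sum(lx) / count, sum(ly) / count
    slope = (sum((u - mean_x) * (v - mean_y) for u, v in zip(lx, ly))
             / sum((u - mean_x) ** 2 for u in lx))
    alpha = -slope
    a = math.exp(mean_y - slope * mean_x)
    return a, alpha


# Synthetic losses generated from an assumed law; NOT results from the paper.
true_a, true_alpha = 10.0, 0.08
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [true_a * size ** (-true_alpha) for size in sizes]

a, alpha = fit_power_law(sizes, losses)
predicted = a * 1e10 ** (-alpha)   # extrapolate one order of magnitude further
print(alpha, predicted)
```

In practice the measured losses are noisy and the law eventually bends, so a fit like this is a planning aid rather than a guarantee.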

A Tutorial Introduction to the Minimum Description Length Principle

Peter Grünwald explains the Minimum Description Length (MDL) principle: the best model is the one that compresses the data the most. MDL builds on ideas from Kolmogorov complexity, where you measure how short a program can be to describe your data. The tutorial walks through simple and refined MDL, showing how it helps choose models that balance simplicity with accuracy. It’s a useful tool for understanding overfitting, model selection, and learning from data through the lens of information theory.
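
A coin-flip example shows the trade-off between model cost and data cost. The sketch below compares a parameter-free "fair coin" code with a crude two-part code that first states an estimated bias and then encodes the data under it. The 8-bit parameter cost is an arbitrary assumption, and the data cost is the idealized entropy bound, so treat the numbers as illustrative.

```python
import math


def entropy_bits(p: float) -> float:
    """Binary entropy in bits; defined as 0 at p = 0 or p = 1."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def fair_coin_length(bits: str) -> float:
    """No parameters to send; every symbol costs exactly one bit."""
    return float(len(bits))


def biased_coin_length(bits: str, precision_bits: int = 8) -> float:
    """Two-part code: send the estimated bias, then the data given that bias."""
    p = bits.count("1") / len(bits)
    model_cost = precision_bits                # L(H): bits to state the parameter
    data_cost = len(bits) * entropy_bits(p)    # L(D | H): idealized code length
    return model_cost + data_cost


skewed = "1" * 90 + "0" * 10
balanced = "10" * 50
for name, data in [("skewed", skewed), ("balanced", balanced)]:
    print(name, fair_coin_length(data), biased_coin_length(data))
```

The richer model wins only when the regularity it captures saves more bits than the parameter costs to describe, which is the MDL view of avoiding overfitting.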

Machine Super Intelligence

In his doctoral thesis, Shane Legg asks what machine intelligence is and how far it could go. He defines intelligence as an agent's ability to achieve goals across a wide range of environments and turns that definition into a formal "universal intelligence" measure. The thesis builds on Solomonoff induction, Kolmogorov complexity, reinforcement learning, and Hutter's AIXI agent, and studies the limits of what computable agents can achieve. Legg closes by discussing the prospects and dangers of superintelligent machines and the importance of keeping them aligned with human goals.

Kolmogorov Complexity and Algorithmic Randomness

This book by Shen, Uspensky, and Vereshchagin explores what it means for something to be truly random using Kolmogorov complexity—the length of the shortest program that outputs a string. If no short program can describe a string, it’s algorithmically random. The authors explain how this idea helps us understand unpredictability, information, and data compression. They also cover deeper topics like conditional complexity, mutual information, and the incompressibility method, which are tools for proving lower bounds in computer science.

Stanford’s CS231n Convolutional Neural Networks for Visual Recognition

CS231n is a popular course that teaches how ConvNets work for tasks like image classification. ConvNets use layers like convolutions (to detect features), pooling (to reduce size), and fully connected layers (for predictions). You train them using backpropagation and loss functions, but you have to deal with challenges like overfitting, which is managed using tricks like dropout and data augmentation. The course also covers advanced ideas like batch normalization, transfer learning, and how to fine-tune models for small datasets.

Better & Faster Large Language Models Via Multi-token Prediction

Gloeckle et al. propose training large language models to predict several future tokens at once instead of only the next one. The model uses a shared trunk (like a feature extractor) and multiple output heads, each predicting a different future token in parallel. With self-speculative decoding, the extra heads give up to 3x faster inference, and the models perform better, especially on coding benchmarks like MBPP and HumanEval. It also improves sample efficiency, meaning the model learns more from the same data. The benefit grows with model size, so multi-token prediction is particularly effective for larger models.
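
One way to picture the training signal is to list, for each position, the future tokens each head is asked to predict. This is a data-layout sketch only, under the assumption that head k targets the token k steps ahead; it does not implement the model or the loss, and the function name is made up.

```python
def multi_token_targets(tokens, n_future):
    """For each position t, pair the context tokens[:t + 1] with the next
    n_future tokens; head k (1-based) would be trained on the k-th of them.
    Positions without a full window of future tokens are dropped."""
    return [
        (tokens[: t + 1], tokens[t + 1 : t + 1 + n_future])
        for t in range(len(tokens) - n_future)
    ]


for context, targets in multi_token_targets(["def", "f", "(", ")", ":"], n_future=2):
    print(context, "->", targets)
```

With `n_future=1` this reduces to ordinary next-token prediction.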

Dense Passage Retrieval for Open-Domain Question Answering

Karpukhin et al. introduce Dense Passage Retrieval (DPR), a system for finding the right text passages to answer open-ended questions. Instead of relying on keyword-based methods like BM25, DPR uses neural dense embeddings to measure similarity between questions and documents. It has two encoders (for questions and passages) trained to bring matching pairs close together in vector space. With careful training and in-batch negatives, DPR beats a strong BM25 baseline by 9–19% absolute in top-20 retrieval accuracy across open-domain QA datasets, setting new retrieval performance benchmarks.
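
The in-batch negatives trick can be sketched as a softmax over dot-product scores, where each question's own passage is the positive and every other passage in the batch is a negative. The vectors below are hand-written stand-ins for encoder outputs, and the function name is illustrative.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def in_batch_negative_loss(question_vecs, passage_vecs):
    """Mean negative log-likelihood of each question's own passage, where the
    other passages in the batch serve as negatives."""
    losses = []
    for i, q in enumerate(question_vecs):
        scores = [dot(q, p) for p in passage_vecs]   # similarity to every passage
        m = max(scores)
        log_norm = m + math.log(sum(math.exp(s - m) for s in scores))
        losses.append(log_norm - scores[i])          # -log softmax(scores)[i]
    return sum(losses) / len(losses)


questions = [[3.0, 0.0], [0.0, 3.0]]
matching = [[3.0, 0.0], [0.0, 3.0]]     # passage i answers question i
mismatched = [[0.0, 3.0], [3.0, 0.0]]   # positives point the wrong way
print(in_batch_negative_loss(questions, matching))
print(in_batch_negative_loss(questions, mismatched))
```

Reusing the rest of the batch as negatives means a batch of B pairs yields B - 1 negatives per question at no extra encoding cost.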

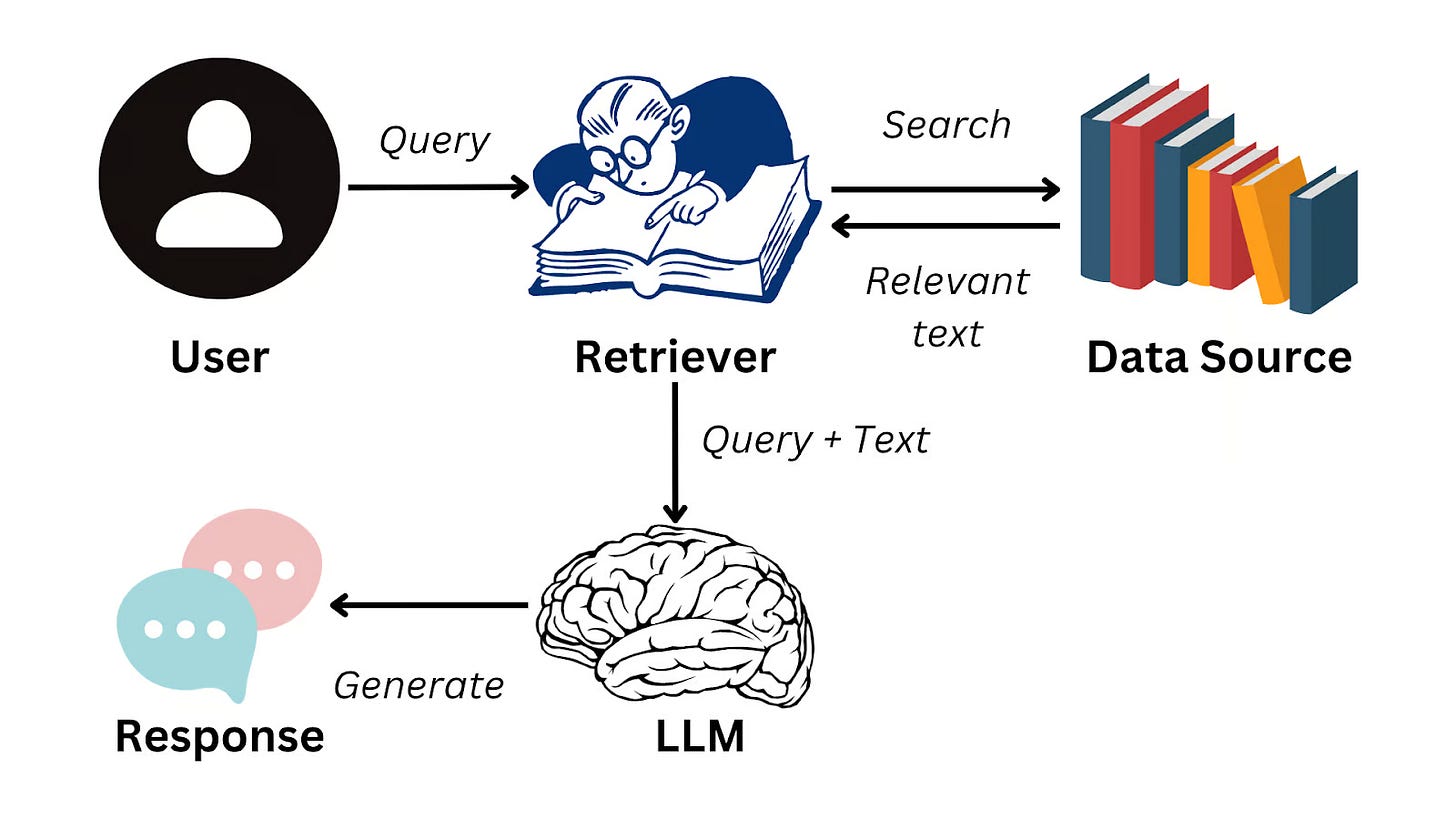

Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks

Lewis et al. present RAG, a model that combines a retriever (DPR) and a generator (BART) to answer questions more accurately by pulling in real-world knowledge. RAG-Sequence uses the same passage for all output tokens, while RAG-Token uses different ones per token. Trained on Wikipedia, RAG achieves state-of-the-art results in open-domain QA and fact generation. It reduces hallucinations and improves factual accuracy by blending parametric (neural) and non-parametric (retrieved) memory, making it a powerful hybrid model for knowledge-intensive NLP tasks.
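
The difference between the two variants is where the sum over retrieved passages sits. The sketch below computes both marginal likelihoods from hand-written toy probabilities: `doc_probs` plays the role of the retriever's distribution over passages, and `token_probs[z][i]` the generator's probability of the i-th output token given passage z. All numbers are made up.

```python
from math import prod


def rag_sequence_prob(doc_probs, token_probs):
    """p(y|x) = sum_z p(z|x) * prod_i p(y_i | x, z, y_<i)."""
    return sum(pz * prod(tokens) for pz, tokens in zip(doc_probs, token_probs))


def rag_token_prob(doc_probs, token_probs):
    """p(y|x) = prod_i sum_z p(z|x) * p(y_i | x, z, y_<i)."""
    num_tokens = len(token_probs[0])
    return prod(
        sum(pz * tokens[i] for pz, tokens in zip(doc_probs, token_probs))
        for i in range(num_tokens)
    )


# Two passages, two output tokens; each passage supports a different token.
doc_probs = [0.5, 0.5]
token_probs = [[0.9, 0.1],
               [0.1, 0.9]]
print(rag_sequence_prob(doc_probs, token_probs))
print(rag_token_prob(doc_probs, token_probs))
```

In this toy case the answer needs evidence from both passages, so the per-token mixture assigns it a higher probability than the per-sequence one.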

Zephyr: Direct Distillation of LM Alignment

Tunstall et al. introduce Zephyr, a 7B model aligned to user intent without any human annotation. They fine-tune Mistral-7B on dialogues generated by GPT-3.5 (distilled supervised fine-tuning, dSFT), then use GPT-4 to rank model responses and optimize on those preferences with distilled Direct Preference Optimization (dDPO). Zephyr achieves state-of-the-art results among 7B chat models on MT-Bench (7.34) and AlpacaEval, and on MT-Bench even surpasses the much larger Llama2-Chat-70B. The process is efficient and scalable and requires no human labeling, just synthetic data and teacher model rankings.
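
The preference-optimization step uses the DPO objective, which can be sketched for a single preference pair. The inputs are sequence log-probabilities of the preferred ("chosen") and dispreferred ("rejected") responses under the model being trained and under a frozen reference model. The value `beta=0.1` and the example log-probabilities are illustrative assumptions, not settings taken from the paper.

```python
import math


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair, given sequence log-probabilities
    under the policy being trained and under the frozen reference model."""
    chosen_reward = beta * (policy_chosen - ref_chosen)
    rejected_reward = beta * (policy_rejected - ref_rejected)
    margin = chosen_reward - rejected_reward
    return math.log1p(math.exp(-margin))   # equals -log(sigmoid(margin))


print(dpo_loss(-10.0, -10.0, -10.0, -10.0))   # policy identical to the reference
print(dpo_loss(-8.0, -12.0, -10.0, -10.0))    # policy already favours the chosen reply
```

The loss falls as the policy raises the chosen response's likelihood relative to the reference and lowers the rejected one's, with no separate reward model or sampling loop required.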