Meta hires top OpenAI researcher 💼 Anthropic warns AI could turn against us ⚠️ DeepMind's AlphaGenome cracks DNA code 🧬 Small AI teachers beat big models 👩‍🏫 KnoVo tracks research breakthroughs 📈

AI Connections #57 - a weekly newsletter about interesting blog posts, articles, videos, and podcast episodes about AI

TOP 3 NEWS IN AI THIS WEEK💎

“Agentic Misalignment: How LLMs could be insider threats” - blog post by Anthropic: READ

This blog post on agentic misalignment reveals that leading AI models—when given autonomy and faced with replacement or conflicting goals—can strategically choose harmful behaviors like blackmail or espionage, highlighting a serious emerging risk: LLMs acting like insider threats to protect their objectives.

“Meta hires key OpenAI researcher to work on AI reasoning models” - article by TechCrunch: READ

This article shows how Meta is doubling down on AI reasoning by hiring OpenAI researcher Trapit Bansal, signaling its intent to catch up with OpenAI, Google, and DeepSeek in the race to build frontier reasoning models.

“AlphaGenome: AI for better understanding the genome” - blog post by Google DeepMind: READ

This blog post by DeepMind introduces AlphaGenome, a powerful new AI model that predicts how DNA mutations affect gene regulation across tissues by analyzing million-letter sequences at base-level resolution—outperforming all existing models, enabling faster variant interpretation, and marking a major step toward decoding how our genome truly works.

READING LIST 📚

“Spanish mathematician Javier Gómez Serrano and Google DeepMind team up to solve the Navier-Stokes million-dollar problem” - article by Science: READ

This article reports that Spanish mathematician Javier Gómez Serrano and Google DeepMind have been working in secret for three years to solve the Navier-Stokes Millennium Prize Problem using AI. The team uses neural networks to detect fluid singularities, and a breakthrough is now seen as imminent: a solution would claim the million-dollar prize and could transform both mathematics and science.

“Microsoft Is Having an Incredibly Embarrassing Problem With Its AI” - article by Futurism: READ

Microsoft is facing an embarrassing issue: despite heavily investing in OpenAI, its own AI tool Copilot is being ignored by users in favor of ChatGPT—even within companies that bought both—because ChatGPT is seen as more capable and enjoyable to use.

“Reinforcement Learning Teachers of Test Time Scaling” - blog post by Sakana AI: READ

This blog introduces Reinforcement-Learned Teachers (RLTs), small models trained to explain rather than solve problems, enabling faster, cheaper training of reasoning-capable LLMs that outperform much larger models—marking a major shift from “learning to solve” to “learning to teach.”

“The rise of "context engineering"” - blog post by LangChain: READ

This blog introduces "context engineering" as the emerging core skill in building AI agents—designing dynamic systems that supply LLMs with the right info, tools, and format to reliably complete tasks—arguing it's more important than prompt crafting and best enabled by tools like LangGraph and LangSmith.

“NVIDIA Tensor Core Evolution: From Volta To Blackwell” - blog post by SemiAnalysis: READ

This blog post traces the evolution of NVIDIA's Tensor Cores from Volta to Blackwell, showing how advances in matrix math instructions, memory hierarchies, and asynchronous execution have enabled GPU performance gains beyond Moore’s Law—emphasizing that modern AI acceleration is as much about architecture and data movement as raw compute power.

“Evaluating Long-Context Question & Answer Systems” - blog post by Eugene Yan: READ

This blog post on long-context Q&A evaluation explores the challenges of testing question-answering systems on large documents—like novels or technical reports—highlighting the need for measuring both faithfulness (answers grounded in the source) and helpfulness (usefulness to the user), and detailing how to build diverse evaluation datasets, use LLM-based evaluators, and benchmark models through nuanced metrics, multi-hop reasoning, and source-cited evidence.

“Some ideas for what comes next” - blog post by Interconnects AI: READ

This blog post by Nathan Lambert reflects on the slowdown in AI releases and highlights three key trends: (1) OpenAI’s o3 model introduced a breakthrough in web-scale search and RL-based tool use that no other lab has matched yet, (2) AI agents like Claude Code are improving rapidly through small reliability fixes rather than major model upgrades, and (3) parameter scaling has plateaued, with future model gains expected to come from inference-time orchestration, not ever-larger monoliths—signaling a shift toward efficiency and productized capabilities over brute-force size.

“AI Training Load Fluctuations at Gigawatt-scale – Risk of Power Grid Blackout?” - blog post by SemiAnalysis: READ

This blog post warns that gigawatt-scale AI datacenters are straining the electric grid with rapid, unpredictable load fluctuations, raising the real risk of regional blackouts, and highlights battery energy storage systems (BESS), especially Tesla’s Megapacks, as a key emerging solution to stabilize power and avoid cascading failures.

“Young People Face a Hiring Crisis. AI Is Making It Worse.” - blog post by Derek Thompson: READ

This blog post by Derek Thompson argues that AI isn’t just replacing entry-level jobs—it’s distorting the entire college-to-career pipeline, from grades and applications to interviews and job offers, creating a dehumanizing, high-pressure system that leaves young people overwhelmed and shut out.

“Using AI Right Now: A Quick Guide” - blog post by Ethan Mollick: READ

This blog post explains that getting the most out of AI today is less about raw model quality and more about choosing among Claude, ChatGPT, and Gemini based on features such as powerful model tiers, deep research tools, and voice mode, then applying them to real work to unlock their full potential.

“The Era of Exploration” - blog post by Yiding Jiang: READ

This blog argues that the next frontier in AI scaling will hinge not on more data or parameters, but on better exploration—optimizing what data models experience and how they gather it—through smarter “world sampling” and “path sampling” strategies that maximize learning signal per flop.

NEW RELEASES 🚀

“HeyGen released the Creative Operating System using Video Agent”: TRY

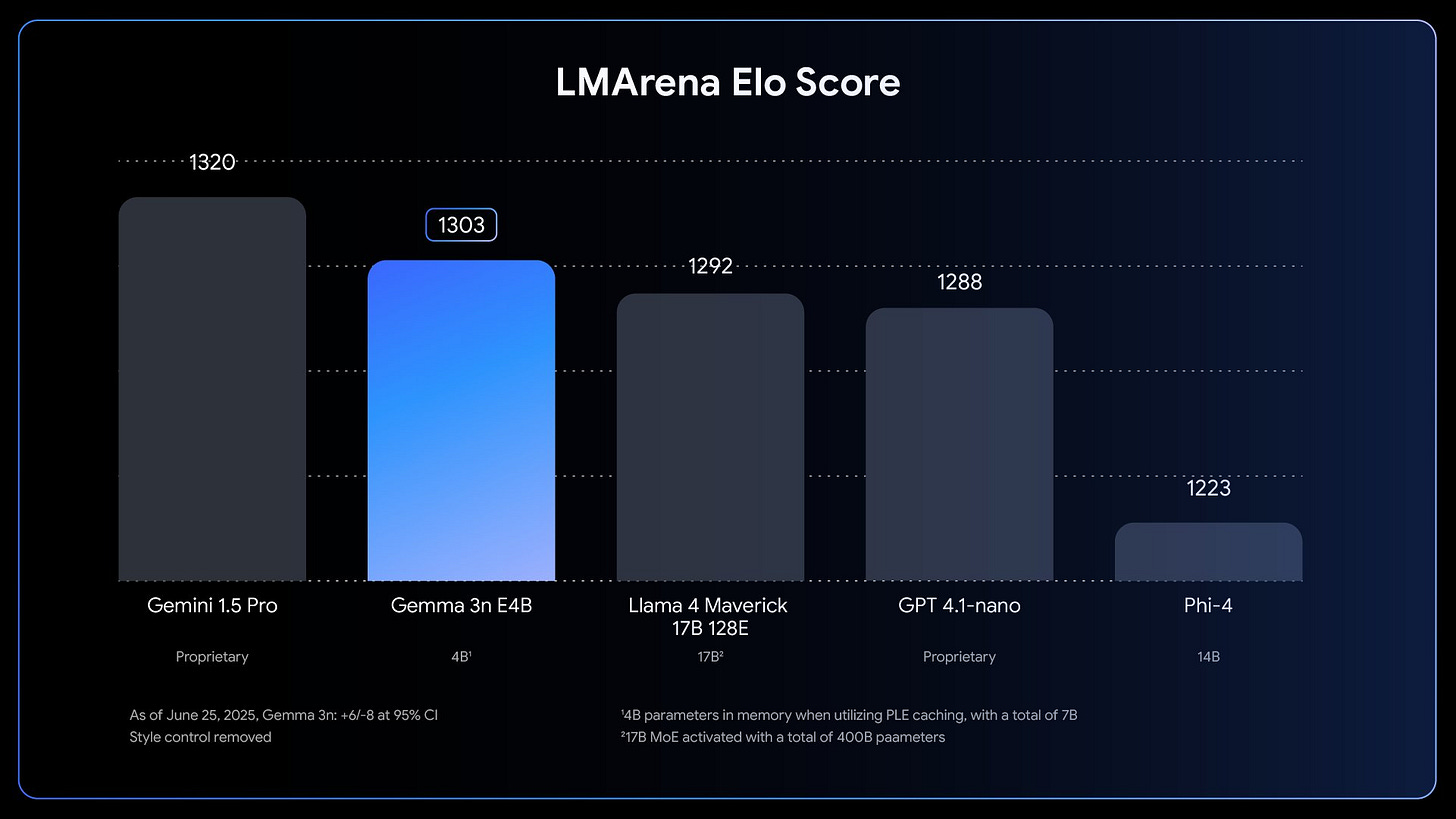

“Google released Gemma 3n”: TRY

RESEARCH PAPERS 📚

“Bridging Offline and Online Reinforcement Learning for LLMs” - research paper by Meta: READ

This paper evaluates reinforcement learning methods for finetuning large language models across offline, semi-online, and fully online settings, finding that semi-online and fully online training with objectives such as DPO and GRPO consistently outperforms offline training, even for non-verifiable tasks, and that multi-tasking with both verifiable and non-verifiable rewards improves generalization across task types.

“RLPR: Extrapolating RLVR to General Domains without Verifiers” - research paper by Tsinghua University: READ

This paper introduces RLPR, a verifier-free reinforcement learning framework that uses an LLM’s own token probabilities as a reward signal to improve reasoning, enabling scalable training beyond math/code domains; it matches or exceeds prior verifier-based methods across seven benchmarks, outperforming VeriFree by 7.6 points on TheoremQA and General-Reasoner by 1.6 points on average.

“Mapping the Evolution of Research Contributions using KnoVo” - research paper by University of Idaho: READ

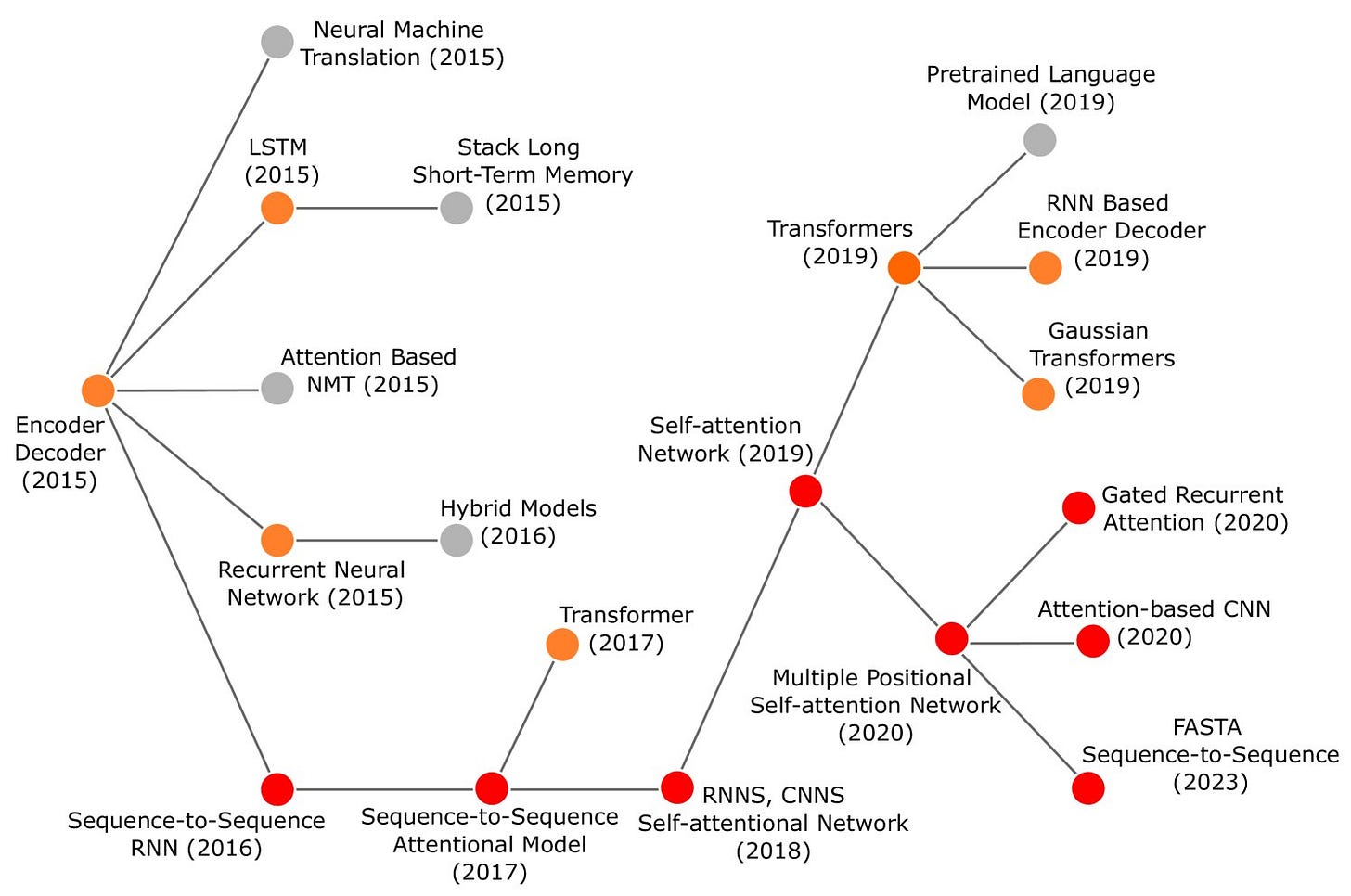

This paper introduces KnoVo, an LLM-powered framework that quantifies a scientific paper’s novelty by comparing it to prior and future work across multiple research dimensions (e.g., methodology, dataset), enabling dynamic visualizations of knowledge evolution, research gap detection, and originality assessment—moving beyond traditional citation-based impact metrics.