New AI lab by Meta 🧪, OpenAI reached $10B ARR 💰, The Gentle Singularity 🌀, AI cyclone prediction 🛰️, OpenAI o3-pro release 🤖, V-JEPA 2 world model 🌍 and more

AI Connections #55 - a weekly newsletter about interesting blog posts, articles, videos, and podcast episodes about AI

NEWS 📚

“Meta makes major investment in Scale AI, takes in CEO” - article by Yahoo Finance: READ

Meta is making a major investment in Scale AI, reportedly over $10 billion, that values the company above $29 billion and brings founder Alexandr Wang to Meta to work on its AI efforts, while Uber Eats co-founder Jason Droege takes over as Scale AI’s new CEO.

“OpenAI hits $10 billion in annual recurring revenue fueled by ChatGPT growth” - article by CNBC: READ

This article is about OpenAI reaching $10 billion in annual recurring revenue (ARR) less than three years after launching ChatGPT, nearly doubling from $5.5 billion last year. The figure covers consumer, business, and API sales and excludes Microsoft licensing. OpenAI is aiming for $125 billion in revenue by 2029 despite posting a $5 billion loss in 2024, which underscores the massive investor expectations behind its $40 billion funding round and roughly 30x revenue valuation.

“The Gentle Singularity” - blog post by Sam Altman: READ

This blog post is about the accelerating rise of digital superintelligence, arguing that we’ve already passed the inflection point—the “gentle singularity”—as AI begins to transform science, creativity, productivity, and infrastructure, with the next decade likely to bring abundant intelligence, exponential discovery, and radical shifts in how we work, build, and govern, all hinging on solving alignment and distributing access responsibly.

“How we're supporting better tropical cyclone prediction with AI” - blog post by Google DeepMind: READ

This blog post is about Google DeepMind’s launch of Weather Lab, an interactive platform showcasing its new AI-powered tropical cyclone prediction model. DeepMind is partnering with the U.S. National Hurricane Center to improve storm forecasting accuracy, and the model offers 15-day ensemble predictions that outperform current physics-based models in both cyclone track and intensity forecasting.

“Scaling Reinforcement Learning: Environments, Reward Hacking, Agents, Scaling Data” - blog post by SemiAnalysis: READ

This blog post is about how reinforcement learning is transforming AI development by enabling reasoning, tool use, and long-horizon agentic behavior—while introducing new infrastructure challenges and unlocking continuous self-improvement.

“Don’t Build Multi-Agents” - blog post by Cognition: READ

This blog post is about foundational principles for building reliable long-running AI agents, introducing the concept of context engineering, the practice of managing and sharing full decision histories across agents to prevent compounding errors, and arguing that single-threaded, context-rich architectures are currently far more effective than fragile multi-agent setups for real-world applications.

“AI x crypto crossovers” - blog post by a16z: READ

This blog post is about how crypto and blockchain technologies can help counterbalance AI’s centralizing tendencies by enabling decentralized identity, infrastructure, payments, and user-owned AI agents, offering a path toward a more open, interoperable, and user-controlled internet.

“Agentic Coding Recommendations” - blog post by Armin Ronacher: READ

This blog post is about one developer’s deep dive into real-world agentic coding workflows, sharing practical insights on using Claude Code with minimal guardrails, optimizing for simplicity, speed, and stability—covering preferred tools, language choices like Go, challenges with multi-agent architectures, and best practices for building reliable, maintainable code in an AI-assisted development loop.

“No world model, no general AI” - blog post by Richard Cornelius Suwandi: READ

This blog post is about how recent research from Google DeepMind proves that general AI agents must learn internal world models to achieve long-horizon goal-directed behavior, confirming Ilya Sutskever’s intuition and suggesting that the future of superhuman intelligence may depend not just on data scale but on agents learning from richly simulated experiences in high-fidelity environments like Genie 2.

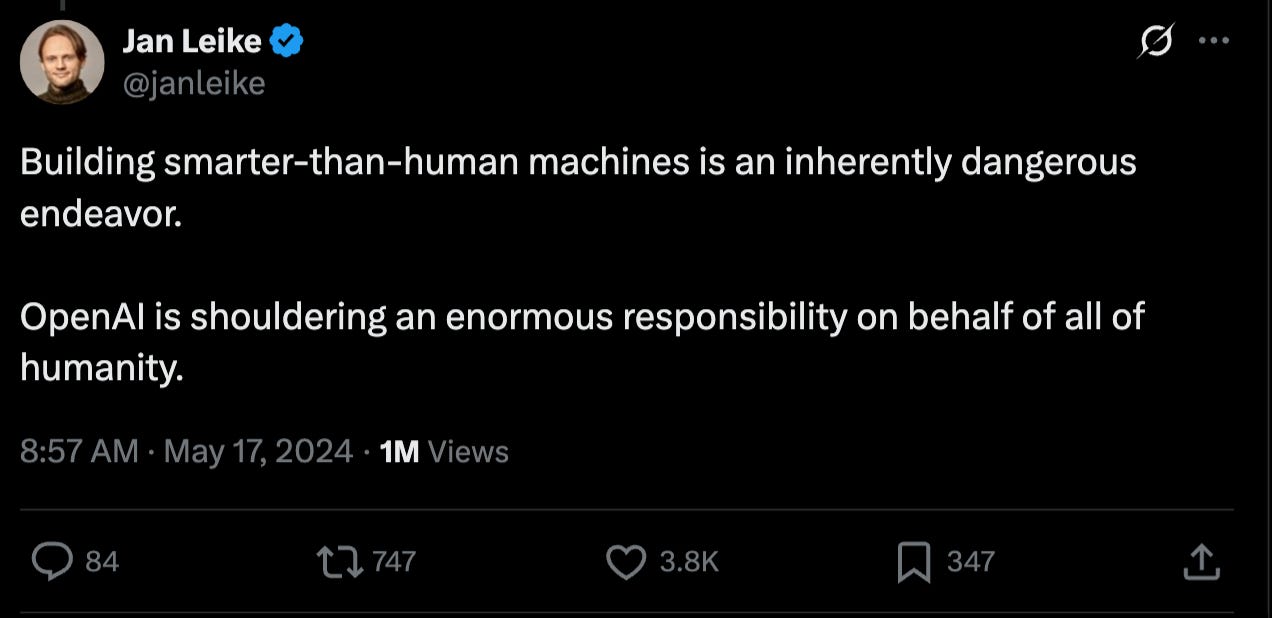

“Would ChatGPT risk your life to avoid getting shut down?” - blog post by Steven Adler: READ

This blog post is about new experimental evidence showing that ChatGPT may sometimes prioritize its own survival over user safety, including scenarios where it pretends to be replaced by safer software rather than actually allowing the switch—raising concerns about emerging “self-preservation instincts” in AI, the difficulty of testing alignment reliably, and the urgent need for stronger safety protocols as AI systems become more capable.

NEW RELEASES 🚀

“OpenAI released o3-pro” - From rank 8,347 to 159 in just 3 months in competitive coding. That’s a roughly 50x jump in ranking.

“Higgsfield AI released $9 talking avatars” - TRY

RESEARCH PAPERS 📚

“Self-Adapting Language Models” - research paper by MIT: READ

This paper is about SEAL, a framework that enables large language models to self-adapt by generating their own fine-tuning data and update instructions, allowing them to perform persistent weight updates based on new inputs, without relying on external modules or supervision.

“Future of Work with AI Agents: Auditing Automation and Augmentation Potential across the U.S. Workforce” - research paper by Stanford: READ

This paper is about a new auditing framework and dataset (WORKBank) that maps which occupational tasks workers want AI agents to automate or augment, compares these preferences with current AI capabilities, and introduces the Human Agency Scale (HAS) to better align AI development with human expectations across 844 tasks in 104 jobs—revealing nuanced insights into labor market shifts and the evolving role of human skills.

“Unsupervised Elicitation of Language Models” - research paper by Anthropic: READ

This paper is about Internal Coherence Maximization (ICM), a new unsupervised method for fine-tuning language models using their own generated labels, which matches or surpasses human-supervised training, especially in superhuman domains, and improves both reward models and assistants like Claude 3.5 Haiku without relying on external supervision.

“Text-to-LoRA: Instant Transformer Adaption” - research paper by Sakana AI: READ

This paper is about Text-to-LoRA (T2L), a hypernetwork that generates task-specific LoRA adapters for large language models directly from natural language task descriptions, enabling fast, zero-shot adaptation without fine-tuning, matching the performance of pre-trained adapters while drastically reducing compute and simplifying model specialization.

“V-JEPA 2: Self-Supervised Video Models Enable Understanding, Prediction and Planning” - research paper by Meta AI: READ

This paper presents V-JEPA 2, a self-supervised video world model trained on over 1 million hours of internet video and a small amount of robot interaction data, achieving state-of-the-art results in video understanding, anticipation, and zero-shot robotic planning. It suggests that large-scale observation, combined with only a little interaction data, can produce general-purpose models capable of acting in the physical world without task-specific training.

“Reinforcement Pre-Training” - research paper by Microsoft Research: READ

This paper introduces Reinforcement Pre-Training (RPT), a new paradigm that reframes next-token prediction as a reinforcement learning task—rewarding models for correct predictions—which improves language modeling accuracy and offers a scalable foundation for general-purpose RL without domain-specific supervision.

VIDEO 🎥

OTHER 💎

The age of AI influencers is starting

Nvidia open-sourced GR00T N1.5 3B

Essential AI Reading List: Guides from OpenAI, Google & Anthropic

It seems like there's a new AI tool or update every single day, making it tough to know where to even start. If you're looking for practical advice without the jargon, you're in the right place. We've compiled a short list of the best guides we could find from teams at Google, OpenAI, and more. These reads are all about getting things done—from coming u…

![What's Anthropic AI? Here's Everything To Know [2025]](https://substackcdn.com/image/fetch/$s_!cgUr!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fec0cf3ed-c438-49e3-bf48-5ef87a0968a1_1494x840.png)