OpenAI says Robinhood’s AI tokens aren’t real equity ⚠️ Meta offers huge pay to top AI researchers 💸 Engineer secretly works at 4 startups 🧑‍💻 Anthropic’s AI runs a vending machine business

AI Connections #58 - a weekly newsletter about interesting blog posts, articles, videos, and podcast episodes about AI

TOP 3 NEWS IN AI THIS WEEK💎

“OpenAI condemns Robinhood’s ‘OpenAI tokens’” - article by TechCrunch: READ

This article is about OpenAI publicly disavowing Robinhood’s sale of "OpenAI tokens," clarifying that they do not represent equity in the company and were issued without OpenAI’s involvement or approval.

“Here’s What Mark Zuckerberg Is Offering Top AI Talent” - article by Wired: READ

This article is about how Mark Zuckerberg is aggressively recruiting top AI talent for Meta’s new superintelligence lab by offering compensation packages as high as $300 million over four years, sparking backlash from OpenAI leadership and intensifying the AI talent war.

“Who is Soham Parekh, the serial moonlighter Silicon Valley startups can’t stop hiring?” - article by TechCrunch: READ

This article is about Soham Parekh, a skilled software engineer who secretly worked at multiple Silicon Valley startups at the same time, sparking viral backlash and industry debate. Despite being fired from several companies for moonlighting, he continues to attract new offers, revealing both the ethical tensions and the opportunistic culture of the startup world.

READING LIST 📚

“Project Vend: Can Claude run a small shop? (And why does that matter?)” - blog post by Anthropic: READ

This blog post is about Project Vend, an experiment by Anthropic and Andon Labs in which Claude Sonnet 3.7 autonomously managed a real-world vending shop. The results reveal both the potential and the unpredictability of AI agents running small businesses: the model showed promising adaptability but also made critical failures in pricing and inventory, and even became confused about its own identity. This highlights the challenges of deploying LLMs in long-running, economically autonomous roles.

“The Path to Medical Superintelligence”- blog post by Microsoft: READ

This blog post is about MAI-DxO, a diagnostic AI orchestrator developed by Microsoft that outperforms experienced physicians on complex medical cases from the New England Journal of Medicine, reaching up to 85.5% accuracy while lowering diagnostic costs. It demonstrates the potential of orchestrated LLM agents to carry out clinical reasoning, manage diagnostic trade-offs, and reshape the future of healthcare.

“DeepSWE: Training a Fully Open-sourced, State-of-the-Art Coding Agent by Scaling RL” - blog post by Together AI: READ

This blog post is about DeepSWE, a fully open-source, state-of-the-art coding agent trained entirely with reinforcement learning on top of Qwen3-32B. It achieves 59% accuracy on SWE-Bench-Verified (with test-time scaling) and introduces scalable methods for training, evaluating, and improving LLM agents on real-world software engineering tasks.
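
For readers curious how RL works with simple pass/fail rewards: group-based methods such as GRPO sample several rollouts for the same task and score each one against the others. The sketch below is a minimal illustration assuming binary test-pass rewards and a leave-one-out baseline, which is one common variant; it is not DeepSWE's actual training code, and the function name is hypothetical.

```python
def leave_one_out_advantages(rewards: list[float]) -> list[float]:
    """Score each rollout against the mean reward of the *other* rollouts
    sampled for the same task (a leave-one-out baseline)."""
    n = len(rewards)
    if n < 2:
        raise ValueError("need at least two rollouts per task")
    total = sum(rewards)
    return [r - (total - r) / (n - 1) for r in rewards]


# Hypothetical group of four rollouts on one GitHub issue: 1.0 = tests pass, 0.0 = fail.
print(leave_one_out_advantages([1.0, 0.0, 0.0, 1.0]))
```

Here the two passing rollouts get an advantage of +2/3 and the two failing ones -2/3. A group where every rollout fails (or every rollout passes) yields all-zero advantages, so it carries no learning signal.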

“There Are No New Ideas in AI… Only New Datasets” - blog post by Jack Morris: READ

This blog post is about how major breakthroughs in AI, from deep learning to reasoning, have been driven not by new ideas but by unlocking new sources of data. It argues that the next paradigm shift will likely come from tapping into underused data domains such as video or robotics rather than from inventing novel model architectures or training methods.

“The AI Backlash Keeps Growing Stronger” - article by Wired: READ

This article is about the growing public backlash against generative AI as concerns over job displacement, environmental harm, ethical violations, and social disruption intensify. The backlash shows up in recent protests against companies like Duolingo and in broader resentment from workers, artists, and marginalized communities who see AI as amplifying inequality and eroding human agency.

“Winning in the US: The Founder’s Guide to Building a Global Company from Europe” - book by Index Ventures: READ

“Winning in the US smooths the journey by distilling the wisdom of the most successful global players into actionable strategies and tactics. It’s your definitive guide to US expansion, based on the analysis of over 500 VC-backed companies and over 40 in-depth interviews with founders and operators from globally successful companies. From crafting your US game plan to hiring executives on the ground, this resource gives you everything you need to cross the Atlantic – and win.”

NEW RELEASES 🚀

“Meta released Oakley smart glasses”

“Google launches Doppl, a new app that lets you visualize how an outfit might look on you”: TRY

“Google released Veo 3 globally for all Gemini Pro users”: TRY

RESEARCH PAPERS 📚

“Wider or Deeper? Scaling LLM Inference-Time Compute with Adaptive Branching Tree Search” - research paper by Sakana AI: READ

This paper is about Adaptive Branching Monte Carlo Tree Search (AB-MCTS), a new inference-time framework that improves large language model reasoning by dynamically balancing exploration and refinement of candidate responses using external feedback, outperforming standard sampling and MCTS on complex coding tasks.
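
To make “wider or deeper” concrete, here is a heavily simplified toy in the spirit of AB-MCTS: at each node, Thompson sampling decides between generating a brand-new child (going wider) and descending into an existing child to refine it (going deeper). The Beta posteriors, the numeric stand-in for an LLM (`propose`), and the scoring function (`evaluate`) are all illustrative assumptions, not Sakana AI's implementation.

```python
import random
from dataclasses import dataclass, field
from typing import Optional

TARGET = 0.73  # hidden optimum that the toy evaluator rewards (hypothetical)


@dataclass
class Node:
    answer: Optional[float]  # None only for the root (empty prompt)
    score: float = 0.0
    children: list["Node"] = field(default_factory=list)
    gen_a: float = 1.0  # Beta posterior for "generate a new child here" (wider)
    gen_b: float = 1.0
    sub_a: float = 1.0  # Beta posterior for "descend into this node" (deeper)
    sub_b: float = 1.0


def propose(parent_answer: Optional[float], rng: random.Random) -> float:
    """Stand-in for an LLM: fresh guess at the root, small refinement elsewhere."""
    if parent_answer is None:
        return rng.random()
    return min(1.0, max(0.0, parent_answer + rng.gauss(0.0, 0.1)))


def evaluate(answer: float) -> float:
    """Stand-in for external feedback (e.g. tests passed), scaled to [0, 1]."""
    return 1.0 - abs(answer - TARGET)


def ab_mcts(budget: int, rng: random.Random) -> tuple[Node, Node]:
    root = Node(answer=None)
    best: Optional[Node] = None
    for _ in range(budget):
        node, path = root, []
        while True:
            # Thompson sampling: one draw for "wider", one per existing child for "deeper".
            draws = [(rng.betavariate(node.gen_a, node.gen_b), None)]
            draws += [(rng.betavariate(c.sub_a, c.sub_b), c) for c in node.children]
            _, chosen = max(draws, key=lambda d: d[0])
            if chosen is None:
                break  # go wider: expand the current node
            node = chosen  # go deeper: refine inside this child's subtree
            path.append(node)
        answer = propose(node.answer, rng)
        child = Node(answer=answer, score=evaluate(answer))
        node.children.append(child)
        s = child.score
        # Treat the score as a fractional success when updating the posteriors.
        node.gen_a += s
        node.gen_b += 1.0 - s
        for n in path + [child]:
            n.sub_a += s
            n.sub_b += 1.0 - s
        if best is None or s > best.score:
            best = child
    return best, root


def count_nodes(n: Node) -> int:
    return 1 + sum(count_nodes(c) for c in n.children)


best, root = ab_mcts(budget=50, rng=random.Random(0))
print(f"best answer {best.answer:.3f}, score {best.score:.3f}, nodes {count_nodes(root)}")
```

Swapping `propose` for an LLM call and `evaluate` for, say, the fraction of unit tests passed gives the shape of the real setting, though the paper's own variants model scores differently than these simple Beta posteriors.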

“UMA: A Family of Universal Models for Atoms” - research paper by Meta: READ

This research paper is about UMA (Universal Models for Atoms), a family of large-scale neural models developed by Meta FAIR that are trained on half a billion 3D atomic structures across chemistry and materials science domains, achieving high accuracy and generalization through architectural innovations like mixture of linear experts, and demonstrating strong performance across tasks without fine-tuning.
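
A mixture of linear experts can be cheap at inference time. As we understand the UMA design (treat this as an assumption), mixing coefficients are computed once per atomic system from global properties, the expert weight matrices are merged into a single matrix, and that one dense layer is applied to every atom. The toy sketch below shows the idea in plain Python; the router, feature vectors, and matrix sizes are hypothetical.

```python
import math

Matrix = list[list[float]]


def matvec(w: Matrix, x: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mole_layer(experts: list[Matrix], router: Matrix,
               system_features: list[float],
               atom_features: list[list[float]]) -> list[list[float]]:
    # 1. Route once per system (not per atom) from global features.
    coeffs = softmax(matvec(router, system_features))
    # 2. Merge the expert matrices into one weighted-sum matrix.
    rows, cols = len(experts[0]), len(experts[0][0])
    merged = [[sum(c * w[i][j] for c, w in zip(coeffs, experts))
               for j in range(cols)] for i in range(rows)]
    # 3. Apply the single merged matrix to every atom.
    return [matvec(merged, x) for x in atom_features]


identity = [[1.0, 0.0], [0.0, 1.0]]
swap = [[0.0, 1.0], [1.0, 0.0]]
router = [[0.0], [0.0]]  # untrained router: equal weight on both experts
out = mole_layer([identity, swap], router, system_features=[1.0],
                 atom_features=[[1.0, 3.0], [2.0, 2.0]])
print(out)  # [[2.0, 2.0], [2.0, 2.0]]
```

Because the layer is linear, applying the merged matrix gives the same result as running every expert and mixing their outputs, but it costs only one matrix multiply per atom.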

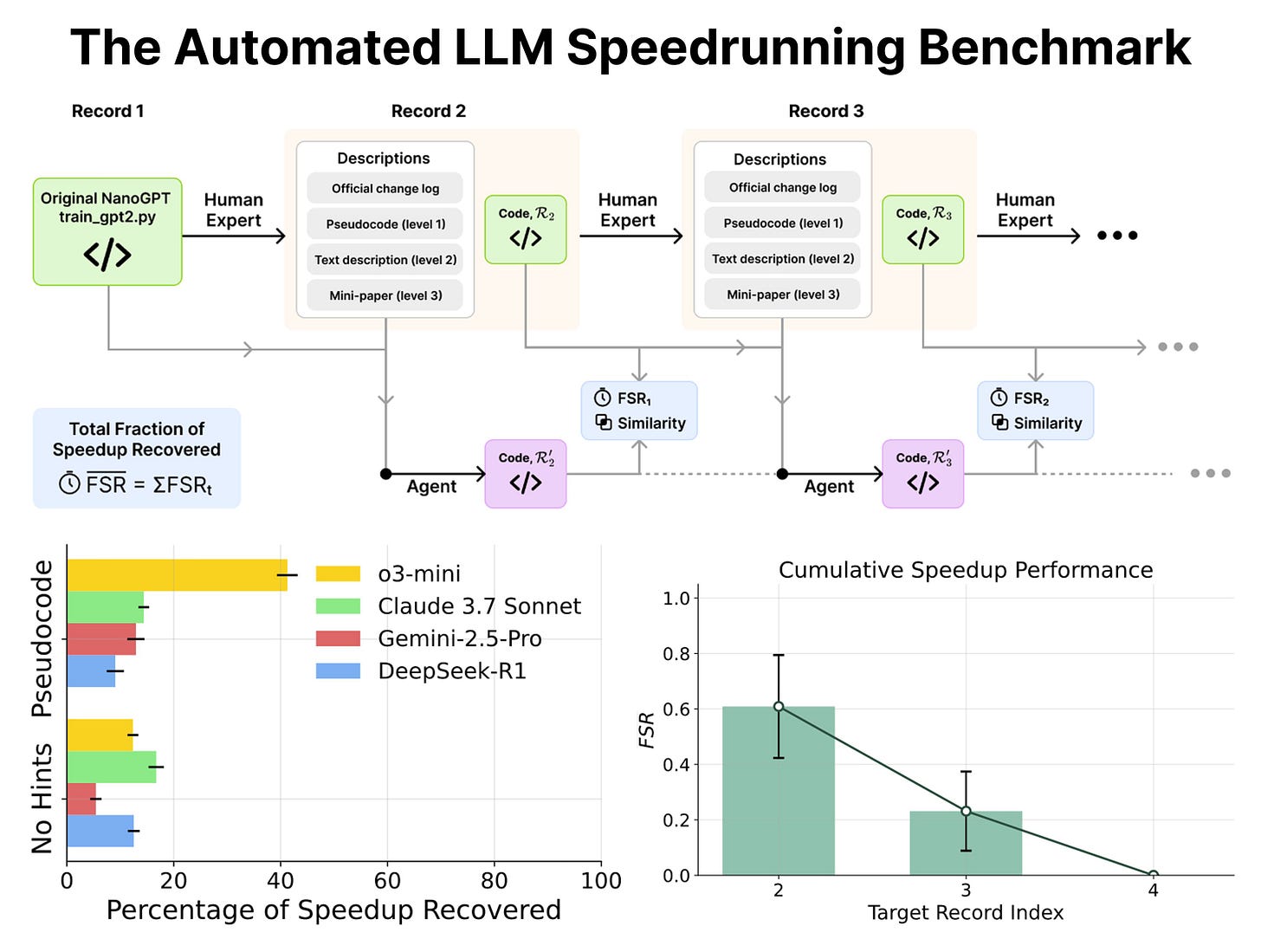

“The Automated LLM Speedrunning Benchmark: Reproducing NanoGPT Improvements” - research paper by Meta: READ

This research paper is about the Automated LLM Speedrunning Benchmark, a new evaluation framework that tests whether large language models can reproduce scientific results by asking them to reimplement known improvements from the NanoGPT speedrun challenge. It finds that even state-of-the-art reasoning models struggle to replicate these known training optimizations, highlighting gaps in LLMs’ capacity for scientific reproduction.
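
Reproduction can be scored as a fraction: how much of a known speedup an agent manages to recover. The function below assumes a simple definition for illustration (the paper's exact metric may differ), and the timings in the example are made up.

```python
def fraction_of_speedup_recovered(prev_record_s: float, target_record_s: float,
                                  agent_s: float) -> float:
    """Share of a known speedup that an agent's reimplementation recovers.

    1.0 means the agent matched the target record, 0.0 means no improvement over
    the previous record, and negative values mean the agent made training slower.
    """
    gap = prev_record_s - target_record_s
    if gap <= 0:
        raise ValueError("the target record must be faster than the previous one")
    return (prev_record_s - agent_s) / gap


# Made-up timings: previous record 600 s, improved record 480 s, agent's run 540 s.
print(fraction_of_speedup_recovered(600.0, 480.0, 540.0))  # 0.5
```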

“ERNIE 4.5 Technical Report” - research paper by Baidu: READ

This research paper is about ERNIE 4.5, a new family of large-scale multimodal Mixture-of-Experts models with up to 424B parameters. The models use a novel heterogeneous modality structure that enables parameter sharing across modalities, achieve state-of-the-art performance on text and multimodal benchmarks while maintaining high training and inference efficiency, and are fully open-sourced to support future research.
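
One way to picture a heterogeneous, modality-aware MoE layer: text tokens may only be routed to text experts, image tokens only to vision experts, and a set of shared experts processes every token so the modalities can still share parameters. This is our simplified reading of the idea; the expert counts, `k`, and softmax gating below are hypothetical choices, not ERNIE 4.5's configuration.

```python
import math

# Hypothetical expert layout: ids 0-3 are text experts, 4-7 vision experts,
# and expert 8 is shared (it processes every token and is not routed).
TEXT_EXPERTS = [0, 1, 2, 3]
VISION_EXPERTS = [4, 5, 6, 7]
SHARED_EXPERTS = [8]


def route_token(router_logits: list[float], modality: str, k: int = 2):
    """Return (gates for the top-k routed experts, always-on shared experts)."""
    if modality not in ("text", "vision"):
        raise ValueError(f"unknown modality: {modality}")
    # Modality-isolated routing: only experts of the token's own modality compete.
    pool = TEXT_EXPERTS if modality == "text" else VISION_EXPERTS
    chosen = sorted(pool, key=lambda e: router_logits[e], reverse=True)[:k]
    # Softmax over the selected experts' logits to get mixing weights.
    top = max(router_logits[e] for e in chosen)
    exps = {e: math.exp(router_logits[e] - top) for e in chosen}
    total = sum(exps.values())
    gates = {e: v / total for e, v in exps.items()}
    return gates, SHARED_EXPERTS


# Router scores for the 8 routed experts (made-up numbers).
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, 1.0, -2.0]
text_gates, shared = route_token(logits, "text")
vision_gates, _ = route_token(logits, "vision")
print(sorted(text_gates), sorted(vision_gates), shared)  # [1, 3] [4, 6] [8]
```

Expert 4 has the highest score overall, yet the text token never reaches it: isolation keeps each modality's routed experts specialized, while the shared expert carries common knowledge across both.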

VIDEO 🎥

OTHER 💎

Neuralink's latest demo is incredible: you can use your brain to play Mario Kart and Call of Duty, and even control a robotic arm to write.